Introduction

The economic evaluation of health care is a general framework for informing decisions about whether particular health-care technologies represent a cost-effective use of health-care resources. Commonly, the evidence required to inform a decision about the cost-effectiveness of a given set of competing health technologies is not available from a single source. Mathematical modeling can be used to support this decision-analytic framework, allowing the full range of relevant evidence to be synthesized and brought to bear on the decision problem (Briggs et al., 2006). The process of developing a decision-analytic model is generally seen as iterative, and it requires the model developer to make a substantial number of choices about what should be included in a model and how the included phenomena should be related to one another. These choices arise at every stage of model development and include, to name but a few, choices about the comparators to be assessed, the health states and sequences of events that will make up the model’s structure, the evidence sources used to inform the model parameters, and the statistical methods used to derive those parameters. Importantly, the absence of perfect information with which to validate a model comprehensively means that there is rarely a definitive way to determine prospectively whether these choices are right or wrong. Instead, model development choices are made on the basis of subjective judgments, with the ultimate goal of developing a model that will be useful in informing the decision at hand.

Therefore, model development is perhaps best characterized as a complex process in which the modeler, in conjunction with other stakeholders, determines what is relevant to the decision problem (and, at the same time, what can reasonably be considered irrelevant to it). This notion of relevance has a direct bearing on the credibility of a model and on the interpretation of the results it generates. Failure to account for the complexities of the decision problem may result in models that are “mathematically sophisticated but contextually naïve” (Ackoff, 1979). The development of useful mathematical models therefore requires more than mathematical ability alone: it also requires the model developer to understand the complexity of the real system that the model will attempt to represent, and the choices available for translating this understanding into a credible conceptual and mathematical structure. It is perhaps surprising that while much has been written about the technical aspects of model development (for example, the statistical extrapolation of censored data and methods for synthesizing evidence from multiple sources), there is comparatively little practical guidance on formal processes for determining an appropriate model structure. It is this complex and messy subject matter that forms the focus of this article.

The purpose of this article is not to rigidly prescribe how model development decisions should be made, nor is it intended to be a comprehensive guide to ‘how to model.’ The former would undoubtedly fail to reflect the unique characteristics of each individual decision problem and could discourage the development of new and innovative modeling methods; the latter would inevitably fail to reflect the sheer breadth of decisions required during model development. Rather, the purposes of this article are threefold:

- To highlight that structural model development choices invariably exist;

- To suggest a generalizable and practical hierarchical approach through which these alternative choices can be prospectively exposed, considered, and assessed; and

- To highlight key issues and caveats associated with the use of certain types of evidence in informing the conceptual basis of the model.

The article is set out as follows. It begins by introducing concepts surrounding the role and interpretation of mathematical models in general, and highlights the importance of conceptual modeling within the broader model development process. Existing literature on model structuring and conceptual modeling is then briefly discussed. The article then suggests a practicable framework for understanding the nature of the decision problem, with the aim of moving toward a credible and acceptable final mathematical model structure. A series of potentially useful considerations is presented to inform this process.

The Interpretation Of Mathematical Models

A mathematical model is a “representation of the real world … characterized by the use of mathematics to represent the parts of the real world that are of interest and the relationships between those parts” (Eddy, 1985). The roles of mathematical modeling are numerous, including extending results from a single trial, combining multiple sources of evidence, translating from surrogate/intermediate endpoints to final outcomes, generalizing results from one context to another, informing research planning and design, and characterizing and representing decision uncertainty given existing information (Brennan and Akehurst, 2000). At a broad level, mathematical or simulation models in Health Technology Assessment (HTA) are generally used to simulate the natural history of a disease and the impact of particular health technologies on that natural history in order to estimate incremental costs, health outcomes, and cost-effectiveness.
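The final step described above, turning simulated costs and outcomes into a statement about cost-effectiveness, can be illustrated with a small calculation. The sketch below assumes that a model run has already produced mean per-patient costs and QALYs for a new technology and a comparator; the class, the function name, and all numbers are hypothetical and illustrative only. It computes the incremental cost-effectiveness ratio (ICER), the extra cost per extra QALY gained.

```python
from dataclasses import dataclass


@dataclass
class StrategyOutcome:
    """Hypothetical mean per-patient outputs from a model run."""
    name: str
    cost: float   # mean discounted cost per patient
    qalys: float  # mean discounted QALYs per patient


def icer(new: StrategyOutcome, comparator: StrategyOutcome) -> float:
    """Incremental cost-effectiveness ratio: extra cost per extra QALY gained."""
    delta_cost = new.cost - comparator.cost
    delta_qalys = new.qalys - comparator.qalys
    if delta_qalys == 0:
        raise ValueError("ICER is undefined when incremental QALYs are zero")
    return delta_cost / delta_qalys


# Illustrative numbers only; not drawn from any real evaluation.
standard = StrategyOutcome("standard care", cost=12_000.0, qalys=6.0)
adjuvant = StrategyOutcome("adjuvant therapy", cost=20_000.0, qalys=6.4)
print(f"ICER: {icer(adjuvant, standard):,.0f} per QALY gained")
```

A ratio alone can mislead when incremental QALYs are negative or when one option dominates another, so in practice the incremental costs and QALYs are usually reported alongside it.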

All mathematical models require evidence to inform their parameters. Such evidence may include information concerning disease natural history or baseline risk of certain clinical events, epidemiology, resource use and service utilization, compliance/participation patterns, costs, health-related quality of life (HRQoL), survival and other time-to-event outcomes, relative treatment effects, and relationships between intermediate and final endpoints. However, the role of evidence is not restricted to informing model parameters. Rather, it is closely intertwined with questions about which model parameters should be considered relevant in the first place and how these parameters should be characterized. The consideration of how best to identify and use evidence to inform a particular model parameter thus first requires an explicit decision that the parameter in question is ‘relevant,’ the specification or definition of that parameter, and some judgment concerning its relationship to other ‘relevant’ parameters included in the model. This often complex and iterative activity is central to the process of model development and can be characterized as a series of decisions concerning (1) what should be included in the model, (2) what should be excluded, and (3) how those phenomena that are included should be conceptually and mathematically represented.

The need for these types of decisions during model development is unavoidable; indeed, it is a fundamental characteristic of the process itself. Although this activity already takes place in health economic model development, it is often unclear how the process has been undertaken and how it may have influenced the final implemented model. In practice, the reporting of model structures tends to be very limited (Cooper et al., 2005) and, if present, usually focuses only on the final model that has been implemented. In such instances, the reader may be left with little idea about whether or why the selected model structure should be considered credible, which evidence has been used to inform its structure, why certain abstractions, simplifications, and omissions have been made, why certain parameters were selected for inclusion (and why others have been excluded), and why the included parameters have been defined in a particular way. This lack of systematicity and transparency ultimately means that judgments concerning the credibility of the model in question may be difficult to make. To produce practically useful guidance concerning the use of evidence in models, it is first important to be clear about the interpretation of abstraction, bias, and credibility in the model development process.

Credibility Of Models

A model cannot include every possible relevant phenomenon; if it could, it would no longer be a model but would instead be the real world. The value of simplification and abstraction within models is the ability to examine phenomena which are complex, unmanageable, or otherwise unobservable in the real world. As a direct consequence of this need for simplification, all models will be, to some degree, wrong. The key question is not whether the model is ‘correct’ but rather whether it can be considered to be useful for informing the decision problem at hand. This usefulness is directly dependent on the credibility of the model’s results, which in turn depends on the credibility of the model from which those results are drawn. Owing to the inevitability of simplification and abstraction within models, there is no single ‘perfect’ or ‘optimal’ model. There may, however, exist one or more ‘acceptable’ models; even what is perceived to be the ‘best’ model could always be subjected to some degree of incremental improvement (and indeed the nature of what constitutes an improvement requires some subjective judgment). The credibility of potentially acceptable models can be assessed and differing levels of confidence can be attributed to their results on the basis of such judgments. The level of confidence given to the credibility of a particular model may be determined retrospectively, through consideration of structural and methodological uncertainty ex post facto, or prospectively, through a priori consideration of the process by which decisions are made concerning the conceptualization, structuring, and implementation of the model.

Defining Relevance In Models

The purpose of models is to represent reality, not to reproduce it. The process of model development involves efforts to reflect those parts of reality that are considered relevant to the decision problem. Judgments concerning relevance may differ between different modelers attempting to represent the same part of reality. The question of ‘what is relevant?’ to a particular decision problem should not be judged solely by the individual developing the model; rather making such decisions should be considered as a joint task between modelers, decision-makers, health professionals, and other stakeholders who impact on or are impacted on by the decision problem under consideration. Failure to reflect conflicting views between alternative stakeholders may lead to the development of models which represent a contextually naïve and uninformed basis for decision-making.

The Role Of Clinical/Expert Input

Clinical opinion is essential in understanding the relevant facets of the system in which the decision problem exists, because it is sourced from individuals who interact with this system in a way that a modeler cannot. This information forms the cornerstone of a model’s contextual relevance. However, it is important to recognize that health professionals cannot fully detach themselves from the system in which they practice; their views of a particular decision problem may be influenced to some degree by the evidence they have consulted, their geographical location, local enthusiasms, their experience and expertise, and a wealth of other factors. Understanding why the views of stakeholders differ from one another is important, especially with respect to highlighting geographical variations. As such, the use of clinical input in informing models and model structures brings with it the potential for bias. Bias may also stem from the modelers themselves, as a result of their expertise, their previous knowledge of the system in which the current decision problem exists, and the time and resources available for model development. Whenever possible, potential biases should be brought to light to inform judgments about a model’s credibility.

Problem Structuring In Health Economics And Other Fields

It is important at this stage to note that although related to one another, there is a distinction between problem structuring methods (PSMs) and methods for structuring models. The former are concerned with understanding the nature and scope of the problem to be addressed, eliciting different stakeholders’ potentially conflicting views of the problem and developing consensus, exploring what potential options for improvement might be available, and even considering whether a problem exists at all. A number of methods to support this activity have emerged from the field of ‘soft’ Operational Research; these include Strategic Options Development and Analysis (SODA) and cognitive mapping, Soft Systems Methodology (SSM), Strategic Choice Approach, and Drama Theory, to name but a few. All stakeholders are seen as active ‘problem owners’ and the views of each are considered important. The emphasis of PSMs is not to identify the ‘rationally optimal’ solution, but rather to lay out the differing perceptions of the problem owners to foster discussion concerning potential options for improvement to the system. The value or adequacy of PSMs is judged by whether they usefully prompt debate, with the intended endpoint being some agreement about the structure of the problem to be addressed and the identification and agreement of potential improvements to that problem situation. They do not necessarily assume that a mathematical model is appropriate or required. These methods are not discussed further here, but the interested reader is directed to the excellent introductory text by Rosenhead and Mingers, 2004.

Conversely, formal methods for model structuring, which relate principally to developing a conceptual basis for the quantitative model, remain comparatively underdeveloped, both in health economic evaluation and in other fields. A recent review of the existing conceptual modeling literature (Robinson, 2008) concluded that although conceptual modeling is ‘probably the most important element of a simulation study,’ there remains, for the most part, a vacuum of research on what conceptual modeling is, why it should be done, and how it can be most effectively implemented. Where formal viewpoints on conceptual modeling have emerged, there is little consensus or consistency concerning how this activity should be approached.

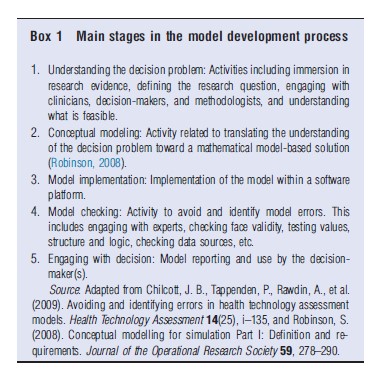

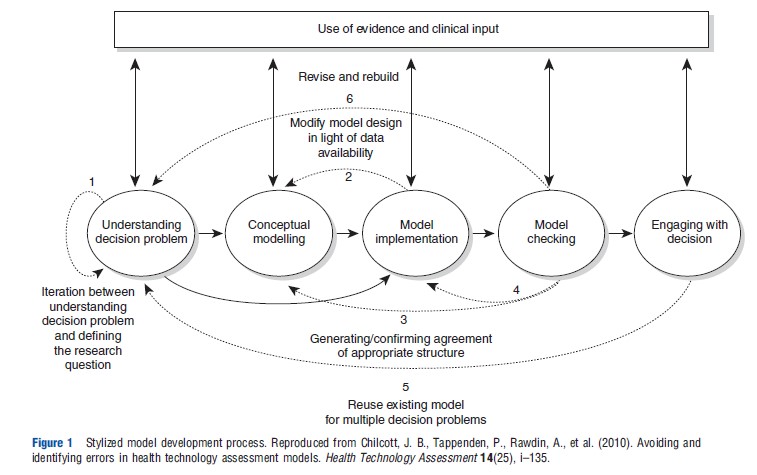

This problem is particularly evident in the field of health economics. A qualitative research study was recently undertaken to examine techniques and procedures for the avoidance and identification of errors in HTA models (Chilcott et al., 2010). Interviewees included modelers working within Assessment Groups involved in supporting NICE’s Technology Appraisal Program as well as those working for outcomes research groups involved in preparing submissions to NICE on behalf of pharmaceutical companies. A central aspect of these interviews involved eliciting each interviewee’s personal account of how they develop models. These descriptions were synthesized to produce a stylized model development process comprising five broad bundles of activities (Box 1 and Figure 1).

One particular area of variability between interviewees concerned their approaches to conceptual model development. During the interviews, respondents discussed the use of several approaches to conceptual modeling, including documenting proposed model structures, developing mock-up models in Microsoft Excel, developing sketches of potential structures, and producing written interpretations of evidence. For several respondents, the model development process did not involve any explicit conceptual modeling activity; in these instances, the conceptual model and implementation model were developed in parallel with no discernible separation between the two activities. This is an important distinction to make with respect to model credibility and validation (as discussed in Section Definition and Purpose of Conceptual Modeling) and the processes through which evidence is identified and used to inform the final implemented model.

Definition And Purpose Of Conceptual Modeling

Although others have recognized the importance of conceptual modeling as a central element of the model development process, it has been noted that this aspect of model development is probably the most difficult to undertake and least well understood (Chilcott et al., 2010; Law, 1991). Part of the problem stems from inconsistencies in the definition and the role(s) of conceptual modeling, and more general disagreements concerning how such activity should be used to support and inform implementation modeling. The definition and characteristics of conceptual modeling are dependent on the perceived purposes of the activity. For the purpose of this document, conceptual modeling is taken as: “the abstraction and representation of complex phenomena of interest in some readily expressible form, such that individual stakeholders’ understanding of the parts of the actual system, and the mathematical representation of that system, may be shared, questioned, tested, and ultimately agreed.”

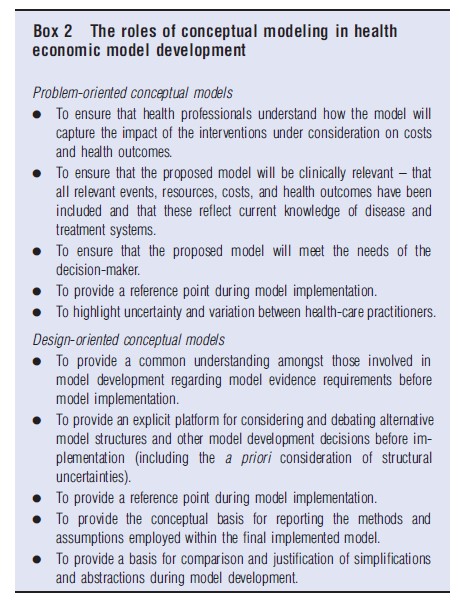

Although there is inevitable overlap associated with processes for understanding the decision problem to be addressed, conceptual modeling is distinguishable from these activities in that it is targeted at producing tangible outputs in the form of one or more conceptual models. In the context of health economic evaluation, conceptual model development may be used to achieve a number of ends, as highlighted in Box 2. Broadly speaking, these roles fall into two groups: (1) those associated with developing, sharing, and testing one’s understanding of the decision problem and the system in which this exists and (2) those associated with designing, specifying, and justifying the model and its structure. Therefore it seems sensible to distinguish between problem-oriented conceptual models and design-oriented conceptual models; this distinction has been made elsewhere outside of the field of health economics (Lacy et al., 2001). The characteristics of these alternative types of conceptual model are briefly detailed below. Both of these types of model may be useful approaches for informing the relevant characteristics of a health economic model.

Problem-oriented conceptual models: This form of conceptual model is developed to understand the decision problem and the system in which that problem exists. The focus of this model form concerns fostering communication and understanding between those parties involved in informing, developing, and using the model. In health economic evaluation, this type of conceptual model is primarily concerned with developing and agreeing a description of the disease and treatment systems: (1) to describe the current clinical understanding of the relevant characteristics of the disease process(es) under consideration and important events therein; and (2) to describe the clinical pathways through which patients with the disease(s) are detected, diagnosed, treated, and followed-up. This type of conceptual model is therefore solely concerned with unearthing the complexity of the decision problem and the system in which it exists; its role is not to make assertions about how those relevant aspects of the system should be mathematically represented. The definition of ‘what is relevant?’ for this type of conceptual model is thus primarily dependent on expert input rather than the availability of empirical research evidence. In this sense, this type of conceptual model is a problem-led method of inquiry.

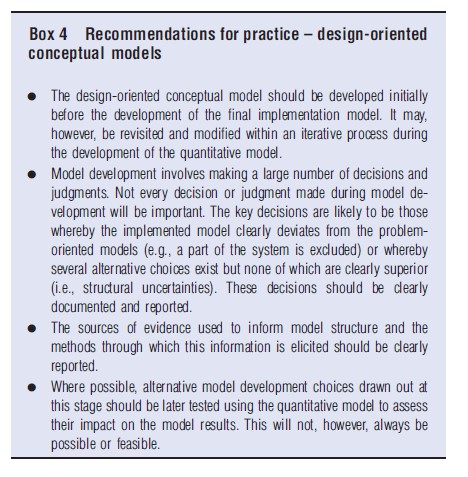

Design-oriented conceptual models: This form of conceptual model focuses on considering alternative, potentially acceptable and feasible quantitative model designs, specifying the model’s anticipated evidence requirements, and providing a basis for comparison with, and justification of, the final implemented model. To achieve these ends, it draws together the problem-oriented conceptual views of relevant disease and treatment processes and the interactions between the two. The design-oriented conceptual model sets out a clear boundary around the model system, defines its breadth (how far down the model will simulate certain pathways for particular patients and subgroups), and sets out the level of depth or detail within each part of the model. It therefore represents a platform for identifying and thinking through potentially feasible and credible model development choices before actual implementation. Within this context, the definition of ‘what is relevant?’ is guided by the problem-oriented models and therefore remains problem-led, but is mediated by the question of ‘what is feasible?’ given the availability of existing evidence and model development resources (available time, money, expertise, etc.).

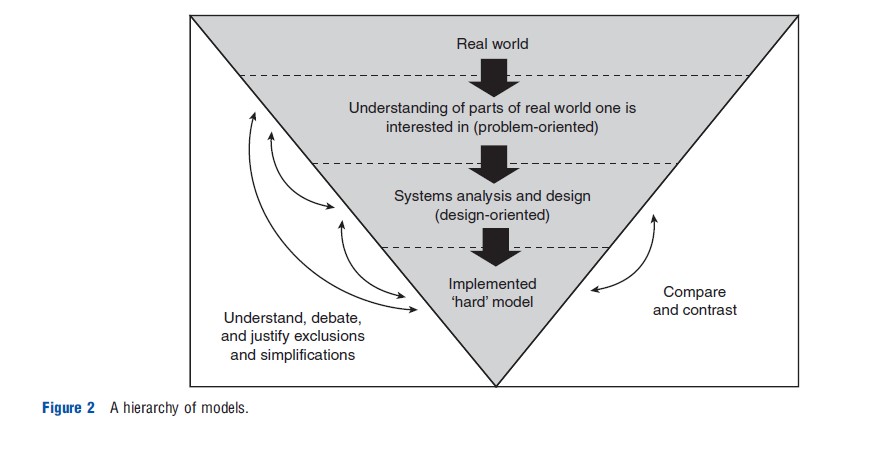

Conceptual modeling activity, however defined, is directly related to model credibility and validation (Sargent, 2004). The absence of an explicit conceptual model means that a specific point of model validation is lost. As a model cannot include everything, an implemented model is inevitably a subset of the system described by the conceptual model. This hierarchical separation allows simplifications and abstractions represented in the implemented model to be compared against its conceptual counterpart, thereby allowing for debate and justification (Robinson, 2008). However, in order to make such comparisons, conceptual model development must be overt: the absence or incomplete specification of a conceptual model leads to the breakdown of concepts of model validation and verification. Without first identifying and considering the alternative choices available, it is impossible to justify the appropriateness of any particular model. Furthermore, without first setting out what is known about the relevant disease and treatment processes, the extent or impact of particular assumptions and simplifications cannot be drawn out explicitly. Therefore, the benefit of separating conceptual modeling activity into distinct problem-oriented and design-oriented components is that this allows the modeler (and other stakeholders) first to understand the complexities of the system the model intends to represent, and then to examine the extent to which the simplifications and abstractions resulting from alternative ‘hard’ model structures will deviate from this initial view of the system. Figure 2 shows the hierarchical relationship between the real world, the problem-oriented and design-oriented conceptual models, and the final implemented model.
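The comparison between an implemented model and its conceptual counterpart can be made explicit with simple bookkeeping. The sketch below assumes that the elements of each model can be listed by name; the element names and the recorded justification are hypothetical, chosen to echo the adjuvant cancer example used later in this article. Set differences then expose which simplifications need justifying, and whether anything in the implementation lacks a conceptual basis.

```python
# Hypothetical elements of a conceptual model and of the implemented model;
# the names are illustrative and not taken from any real model.
conceptual_model = {
    "disease-free survival",
    "local relapse",
    "metastatic relapse",
    "cure",
    "death from cancer",
    "death from other causes",
    "late secondary malignancy",
}

implemented_model = {
    "disease-free survival",
    "metastatic relapse",
    "cure",
    "death from cancer",
    "death from other causes",
}

# Elements left out of the implemented model: each needs a stated justification.
omitted = sorted(conceptual_model - implemented_model)

# Elements with no conceptual counterpart: these suggest the conceptual model is
# incomplete or that the implementation has drifted from it.
unexplained = sorted(implemented_model - conceptual_model)

# Hypothetical record of the reasons given for each simplification.
justifications = {
    "local relapse": "assumed to have negligible cost and HRQoL impact (hypothetical)",
}

for element in omitted:
    reason = justifications.get(element, "NO JUSTIFICATION RECORDED")
    print(f"Omitted: {element} -> {reason}")
print("Implemented but not conceptualized:", unexplained or "none")
```

Here the omission of late secondary malignancy would be flagged as lacking a recorded justification, which is exactly the kind of gap that is invisible when no explicit conceptual model exists.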

Practical Approaches To Conceptual Modeling In HTA

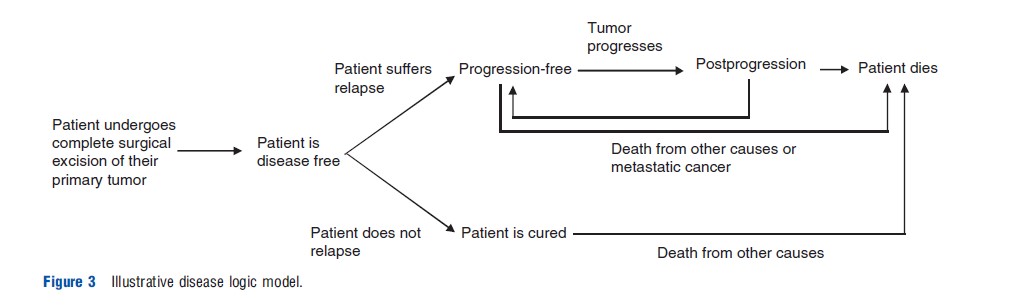

This section suggests how conceptual modeling could be undertaken and which elements of model development activity should be reported. Practical considerations surrounding conceptual model development are detailed below with reference to a purposefully simple model to assess the cost-effectiveness of adjuvant treatments for a hypothetical cancer area. These considerations are intended to be broadly generalizable to economic analysis within other diseases and conditions. It should be noted that the illustrative model is only intended to suggest how the alternative conceptual model forms may be presented and used. The problem-oriented model is divided into two separate conceptual model views: a disease logic model and a service pathways model.

Problem-Oriented Conceptual Modeling – Disease Logic Models

Figure 3 presents a simple example of a conceptual disease logic model for the hypothetical decision problem. The focus of this type of model is principally on relevant disease events and processes rather than on the treatments received. At each point in the pathway, the focus should therefore relate to an individual patient’s true underlying state rather than what is known by health-care professionals at a particular point in time. It should be reiterated that this type of conceptual model does not impose or imply any particular decision concerning modeling methodology or appropriate outcome measures; it is solely a means of describing the relevant clinical events and processes within the system of interest. It should also be noted that such conceptual models should be accompanied by textual descriptions to support their interpretation and to capture any factors or complexities which are not represented diagrammatically.

The following nonexhaustive set of issues and considerations may be useful when developing and reporting this type of problem-oriented conceptual model:

Inclusion/Exclusion Of Disease-Related Events

- What are the main relevant events from a clinical/patient perspective? Does the conceptual model include explicit reference to all clinically meaningful events? For example, could a patient experience local relapse? Or could the intervention affect other diseases (e.g., late secondary malignancy resulting from radiation therapy used to treat the primary tumor)?

- Can these relevant events be discretized into a series of mutually exclusive biologically plausible health states? Does this make the process easier to explain?

- If so, which metric would be clinically meaningful or most clinically appropriate? Which discrete states would be clinically meaningful? How do clinicians think about the disease process? How do patients progress between these states or sequences of events?

- If not, how could the patient’s preclinical trajectory be defined?

- Do alternative staging classifications exist, and if so, can/should they be presented simultaneously?

- Are all relevant competing risks (e.g., relapse or death) considered?

- For models of screening or diagnostic interventions, should the same metric be used to describe preclinical and post-diagnostic disease states?

- Is the breadth of the conceptual model complete? Does the model represent all relevant states or possible sequences of events over the relevant patient subgroup’s lifetime?

- What are the causes of death? When can a patient die from these particular causes? Can patients be cured? If so, when might this happen and for which states does this apply? What is the prognosis for individuals who are cured?
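Some of the questions above (are competing risks such as death represented? can a patient die from every state? are there states with no way out?) can be checked mechanically once the disease logic is written down as states and permitted transitions. The sketch below assumes a hypothetical set of states for the adjuvant cancer example; it is not a transcription of Figure 3. Writing the logic down this way is only a bookkeeping device and does not commit the analysis to a state-transition model.

```python
from collections import deque

# Hypothetical disease logic for the adjuvant cancer example. Each state maps to
# the states a patient can move to next (true underlying disease, not what is known).
transitions = {
    "disease-free": ["local relapse", "metastatic relapse", "cured", "death (other causes)"],
    "local relapse": ["metastatic relapse", "death (other causes)"],
    "metastatic relapse": ["death (cancer)", "death (other causes)"],
    "cured": ["death (other causes)"],
    "death (cancer)": [],
    "death (other causes)": [],
}

DEATH_STATES = {"death (cancer)", "death (other causes)"}

# Flag transitions that point at a state which has not been described.
undefined = {t for nxt in transitions.values() for t in nxt} - set(transitions)


def reachable(start: str) -> set[str]:
    """Breadth-first search over permitted transitions from a starting state."""
    seen, queue = {start}, deque([start])
    while queue:
        for nxt in transitions[queue.popleft()]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


living = [s for s in transitions if s not in DEATH_STATES]

# Every living state should have some route to death, at least from other causes.
missing_death = [s for s in living if not (reachable(s) & DEATH_STATES)]

# Living states with no exits usually signal an omitted event or competing risk.
dead_ends = [s for s in living if not transitions[s]]

print("Undefined target states:", sorted(undefined) or "none")
print("States with no route to death:", missing_death or "none")
print("Living states with no exits:", dead_ends or "none")
```

If, for example, the cured state had been drawn with no exits, it would appear in both checks, prompting the question of what the prognosis is for individuals who are cured.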

Impact Of The Disease On HRQoL And Other Outcomes

- Is there a relationship between states, events, and HRQoL? Which events are expected to impact on a patient’s HRQoL?

- Does the description of the disease process capture separate states in which a patient’s HRQoL is likely to be different?

- Does the description of the disease process capture different states for prognosis?

Representation Of Different-Risk Subgroups

- Is it clear which competing events are relevant for particular subgroups?

- Does the description of the disease process represent a single patient group or should it discriminate between different subgroups of patients?

- Are these states/events likely to differ by patient subgroup?

Impact Of The Technology On The Conceptualized Disease Process

- Have all competing technologies relevant to the decision problem been identified?

- Can the conceptual model be used to explain the impact(s) of the technology or technologies under assessment? Do all technologies under consideration impact on the same set of outcomes in the same way?

- Are there competing theories concerning the impact(s) of the technology on the disease process? Can these be explained using the conceptual model?

- Does the use of the health technology result in any other impacts on health outcomes that cannot be explained using the conceptual disease logic model?

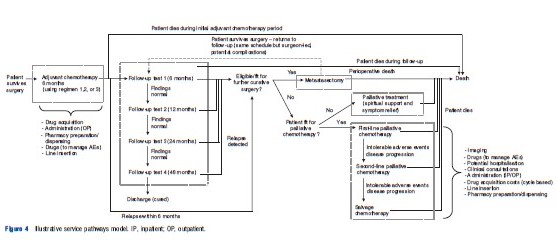

Problem-Oriented Conceptual Modeling – Service Pathways Models

Figure 4 presents an illustrative service pathways model for the hypothetical decision problem. In contrast to the disease logic model, the service pathways model is principally concerned with the health-care interventions received, based on what is known or believed by health-care practitioners at any given point in time. Again, such conceptual models should be accompanied by textual descriptions to ensure clarity in their interpretation and to retain any complexity which is not or cannot be captured diagrammatically.

The following issues and considerations may be useful when developing and reporting this type of conceptual model:

Relationship Between Risk Factors, Prognosis, And Service Pathways

- Is it clear where and how patients enter the service? Is it clear where patients leave the service (either through discharge or death)?

- Does the model make clear which patients follow particular routes through the service?

- Are there any service changes occurring upstream in the disease service which may influence the case-mix of patients at the point of model entry? For example, if surgical techniques were subject to quality improvement might this change patient prognosis further downstream in the pathway?

- Does the model highlight the potential adverse events resulting from the use of particular interventions throughout the pathway? What are these? Do they apply to all competing technologies under consideration?

- Are there any potential feedback loops within the system (e.g., resection→follow-up→relapse→re-resection→follow-up)?

- Which patients receive active treatment and which receive supportive care alone? What information is used to determine this clinical decision (e.g., fitness, patient choice)?
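Questions about entry points, exit points, and feedback loops can likewise be checked once the service pathway is written down as a directed graph. The sketch below assumes a hypothetical pathway built around the resection, follow-up, relapse, and re-resection loop mentioned above; the step names are illustrative. It uses depth-first search, reporting one loop per back edge it encounters, which is enough to flag that loops exist but is not an exhaustive enumeration of every possible cycle.

```python
# Hypothetical service pathway: each step maps to the steps that can follow it,
# based on what clinicians know at the time. Names are illustrative only.
pathway = {
    "referral": ["resection"],
    "resection": ["adjuvant chemotherapy", "follow-up"],
    "adjuvant chemotherapy": ["follow-up"],
    "follow-up": ["relapse detected", "discharge", "death"],
    "relapse detected": ["re-resection", "supportive care"],
    "re-resection": ["follow-up"],
    "supportive care": ["death"],
    "discharge": [],
    "death": [],
}

# Entry points have no predecessors; exits (discharge or death) have no successors.
entries = [n for n in pathway if all(n not in nxt for nxt in pathway.values())]
exits = [n for n, nxt in pathway.items() if not nxt]


def find_cycles(graph: dict[str, list[str]]) -> list[list[str]]:
    """Depth-first search; records one feedback loop per back edge found."""
    cycles: list[list[str]] = []
    path: list[str] = []
    on_path: set[str] = set()
    done: set[str] = set()

    def visit(node: str) -> None:
        path.append(node)
        on_path.add(node)
        for nxt in graph[node]:
            if nxt in on_path:
                # Back edge: the loop runs from the earlier visit of nxt to here.
                cycles.append(path[path.index(nxt):] + [nxt])
            elif nxt not in done:
                visit(nxt)
        path.pop()
        on_path.discard(node)
        done.add(node)

    for node in graph:
        if node not in done:
            visit(node)
    return cycles


print("Entry points:", entries)
print("Exit points:", exits)
for loop in find_cycles(pathway):
    print("Feedback loop:", " -> ".join(loop))
```

A detected loop signals that patients can revisit parts of the pathway (and incur the associated resource use) more than once, which any later design-oriented model will need to represent or explicitly set aside.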

Distinction Between What Is True And What Is Known

- How does the pathway change on detection of the relevant clinical events, as defined in the conceptual disease logic model? For example, at what point may relapse be detected?

- Is the occurrence of certain events likely to be subject to interval censoring?

Geographical Variations

- How do the service pathways represented in the model vary by geographical location or local enthusiasms? What are these differences and which parts of the pathway are likely to be affected most?

Nature Of Resource Use

- What are the relevant resource components across the pathway and what is the nature of resource use at each point of intervention? For example, routine follow-up dependent on relapse status, once-only surgery (except for certain relapsing patients), cycle-based chemotherapy, doses dependent on certain characteristics, dose-limited radiation treatment, etc.

- Does the conceptual service pathways model include all relevant resource components?

- Which resources are expected to be the key drivers of costs?

Impact Of The Technology On The Service Pathway

- Which elements of the conceptual model will the intervention under assessment impact on? For example, different costs of adjuvant treatment, different mean time in follow-up, different numbers of patients experiencing metastatic relapse? What are expected to be the key drivers of costs?

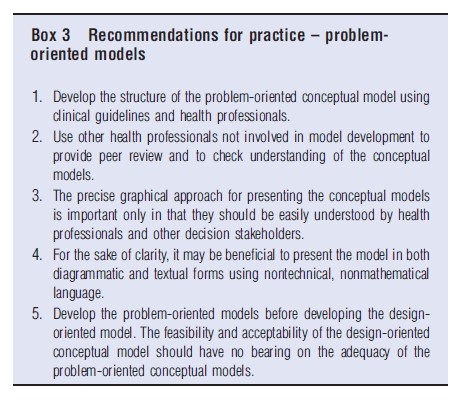

Box 3 presents recommendations for developing and reporting problem-oriented conceptual models.

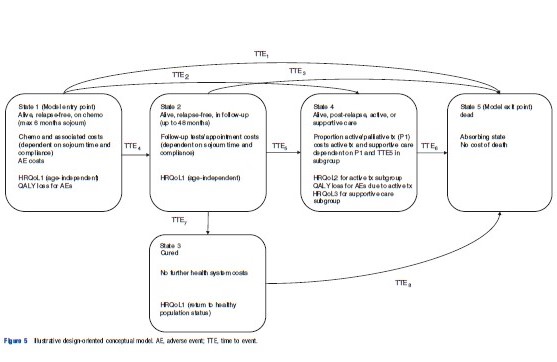

Practical Considerations – Design-Oriented Conceptual Models

Figure 5 presents an example of a design-oriented conceptual model for the hypothetical decision problem (again, note that this is not intended to represent the ‘ideal’ model but merely illustrates the general approach). This type of model draws together the problem-oriented model views with the intention of providing a platform for considering and agreeing structural model development decisions. By following this general conceptual approach it should be possible to identify the anticipated evidence requirements for the model at an early stage in model development.

Anticipated evidence requirements to populate the proposed illustrative model are likely to include the following types of information:

- Time-to-event data to describe sojourn time/event rates and competing risks in States 1–4 for the current standard treatment.

- Relative effect estimates for the intervention(s) versus comparator (e.g., hazard ratios or, if independent hazards are assumed, separate time-to-event data for each arm).

- Information relating to survival following cure.

- HRQoL utilities for cancer and cured states.

- Estimates of QALY losses or utility decrements and duration data for adverse events.

- Information concerning the probability that a relapsed patient undergoes active/palliative treatment.

- Survival and other time-to-event outcomes for relapsed patients.

- Resource use and costs associated with:

  - Chemotherapy (drug acquisition, administration, pharmacy/dispensing, drugs to manage adverse events, line insertion);

  - Follow-up;

  - Supportive care following relapse; and

  - Active treatments following relapse.
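The chemotherapy cost components listed above might be combined into an expected cost per patient roughly as follows. This Python example is a sketch only. It assumes hypothetical unit costs, one line insertion for every treated patient, and a known mean number of cycles received (for example, allowing for early discontinuation). None of these values come from the source.

```python
from dataclasses import dataclass


@dataclass
class ChemoUnitCosts:
    """Hypothetical unit costs for one chemotherapy regimen (arbitrary currency)."""
    drug_acquisition: float     # per cycle
    administration: float       # per cycle
    pharmacy_dispensing: float  # per cycle
    ae_drugs: float             # expected cost of drugs to manage adverse events, per cycle
    line_insertion: float       # one-off, at the start of treatment


def chemo_cost_per_patient(costs: ChemoUnitCosts, mean_cycles: float) -> float:
    """Expected chemotherapy cost per patient: a one-off line insertion plus
    per-cycle costs multiplied by the mean number of cycles received."""
    per_cycle = (costs.drug_acquisition + costs.administration
                 + costs.pharmacy_dispensing + costs.ae_drugs)
    return costs.line_insertion + per_cycle * mean_cycles


example = ChemoUnitCosts(drug_acquisition=1000.0, administration=200.0,
                         pharmacy_dispensing=50.0, ae_drugs=30.0,
                         line_insertion=400.0)
print(chemo_cost_per_patient(example, mean_cycles=6))  # 8080.0
```

Tabulating the components separately, rather than using a single cost per course, may also help to show which resources are likely to be key drivers of costs.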

It may be helpful to consider the following issues when developing design-oriented conceptual models.

Anticipated Evidence Requirements

- What clinical evidence is likely to be available through which to simulate the impact of the new intervention(s)? How should these parameters be defined and what alternatives are available? Should independent or proportional hazards be assumed?

- Are all relevant interventions and comparators compared within the same trial? If not, is it possible for outcomes from multiple trials to be synthesized? How will this be done?

- What evidence is required to characterize adverse events within the model? What choices are available?

- Beyond the baseline and comparative effectiveness data relating to the technology itself, what other outcomes data will be required to populate the downstream portions of the model (e.g., progression-free survival and overall survival by treatment type for relapsed patients, survival duration in cured patients)?

- Will any intermediate–final relationships be modeled? What external evidence is there to support such relationships? What are the uncertainties associated with this approach and how might these be reflected in the model?

- Which descriptions of HRQoL states are possible and how will these parameters be incorporated into the final model?

- Will all model parameters be directly informed by evidence, or will calibration methods (e.g., Bayesian calibration using Markov chain Monte Carlo) be required? If so, which calibration methods will be used, and why should they be considered optimal or appropriate?

- What premodel analysis will be required to populate the model? Which parameters are likely to require this?
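A Weibull model of time to relapse can illustrate the choice between proportional and independent hazards. Under proportional hazards, a single hazard ratio (HR) applied to the comparator curve gives S_int(t) = S_comp(t)^HR. Under independent hazards, each arm has its own fitted curve, and the implied hazard ratio may change over time. The Python sketch below assumes Weibull curves with hypothetical (shape, scale) parameters. It is illustrative rather than a recommended parameterization.

```python
import math
from typing import Tuple

Weibull = Tuple[float, float]  # (shape, scale); hypothetical parameters


def weibull_survival(t: float, params: Weibull) -> float:
    shape, scale = params
    # Weibull survivor function: S(t) = exp(-(t / scale) ** shape)
    return math.exp(-((t / scale) ** shape))


def weibull_hazard(t: float, params: Weibull) -> float:
    shape, scale = params
    # Weibull hazard (requires t > 0): h(t) = (shape / scale) * (t / scale) ** (shape - 1)
    return (shape / scale) * (t / scale) ** (shape - 1)


def survival_ph(t: float, comparator: Weibull, hr: float) -> float:
    # Proportional hazards: the HR multiplies the cumulative hazard,
    # so the intervention curve is S_comp(t) ** hr
    return weibull_survival(t, comparator) ** hr


def implied_hr(t: float, intervention: Weibull, comparator: Weibull) -> float:
    # Independent hazards: the ratio of the two fitted hazards at time t
    return weibull_hazard(t, intervention) / weibull_hazard(t, comparator)


comparator = (1.2, 5.0)    # hypothetical fit to the standard-treatment arm
intervention = (0.9, 6.0)  # hypothetical fit to the intervention arm
for t in (1.0, 5.0):
    print(t, survival_ph(t, comparator, hr=0.7), implied_hr(t, intervention, comparator))
```

With these hypothetical parameters, the implied hazard ratio declines over time because the two arms have different shape parameters. If both arms shared a shape parameter, the implied ratio would be constant and equal to (comparator scale / intervention scale) ** shape. That is consistent with proportional hazards.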

Modeling Clinical Outcomes

- Which outcomes are needed by the decision-maker and how will they be estimated by the model?

- Should trial evidence be extrapolated over time and, if so, how?

- If final outcomes are not reported within the trials, what evidence is available concerning the relationship between intermediate and final outcomes? How might this information be used to inform the analysis of available evidence?

- How will the impact(s) of treatment be simulated? How will this directly/indirectly influence costs and health outcomes? What alternative choices are available?
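Extrapolation matters because trial follow-up rarely covers the full time horizon. One simple way to see this is to fit a parametric model to trial data, then compare the mean survival restricted to the trial follow-up period with the mean implied over a lifetime horizon. The Python sketch below assumes a constant hazard (exponential model) and uses hypothetical follow-up data. In practice, alternative parametric forms would usually be compared. The exponential is used here only because its maximum-likelihood estimate has a closed form.

```python
import math
from typing import Sequence


def exponential_rate(times: Sequence[float], events: Sequence[int]) -> float:
    """Maximum-likelihood constant hazard from right-censored data:
    observed events divided by total follow-up time."""
    return sum(events) / sum(times)


def restricted_mean(rate: float, horizon: float) -> float:
    """Mean survival over [0, horizon] under an exponential model,
    i.e. the integral of exp(-rate * t) from 0 to horizon."""
    return (1.0 - math.exp(-rate * horizon)) / rate


# Hypothetical trial data: follow-up time (years) and event indicator (1 = event)
times = [2.0, 3.5, 1.0, 4.0, 4.0]
events = [1, 1, 1, 0, 0]
rate = exponential_rate(times, events)
print(restricted_mean(rate, horizon=4.0))  # mean within trial follow-up
print(1.0 / rate)                          # extrapolated lifetime mean
```

The gap between the two figures reflects survival beyond trial follow-up, which the model can capture only through extrapolation.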

Modeling Approach

Figure 5 Illustrative design-oriented conceptual model. AE, adverse event; TTE, time to event.

- Which methodological approach (e.g., state transition, patient-level simulation) is likely to be most appropriate? Why?

- Is the proposed modeling approach feasible given available resources for model development?

- How does the approach influence the way in which certain parameters are defined? What alternatives are available (e.g., time-to-event rates or probabilities)?

- Does the proposed modeling approach influence the level of depth possible within certain parts of the model?
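In a cohort state-transition model, event rates are commonly converted to per-cycle transition probabilities. This assumes a constant rate within each cycle: p = 1 - exp(-r * cycle length). Where several events compete to leave the same state, the total rate is converted first. The resulting exit probability is then split in proportion to the cause-specific rates. The Python sketch below applies this to a hypothetical three-state model (disease-free, relapsed, dead) with illustrative annual rates. The states and rates are assumptions, not the structure of the illustrative model above.

```python
import math
from typing import List, Sequence, Tuple


def rate_to_prob(rate: float, cycle_length: float) -> float:
    # Constant rate within a cycle: p = 1 - exp(-rate * cycle_length)
    return 1.0 - math.exp(-rate * cycle_length)


def competing_probs(rates: Sequence[float], cycle_length: float) -> List[float]:
    # Competing exits from one state (rates assumed > 0): convert the total
    # rate, then split the exit probability by each cause-specific rate
    total = sum(rates)
    p_exit = rate_to_prob(total, cycle_length)
    return [p_exit * r / total for r in rates]


def cohort_trace(n_cycles: int, cycle_length: float, r_relapse: float,
                 r_death_df: float, r_death_rel: float) -> List[Tuple[float, float, float]]:
    """Proportion of the cohort in (disease-free, relapsed, dead) at each cycle."""
    p_df_rel, p_df_dead = competing_probs([r_relapse, r_death_df], cycle_length)
    p_rel_dead = rate_to_prob(r_death_rel, cycle_length)
    df, rel, dead = 1.0, 0.0, 0.0
    trace = [(df, rel, dead)]
    for _ in range(n_cycles):
        # Right-hand side uses the state occupancy from the previous cycle
        df, rel, dead = (
            df * (1.0 - p_df_rel - p_df_dead),
            rel * (1.0 - p_rel_dead) + df * p_df_rel,
            dead + df * p_df_dead + rel * p_rel_dead,
        )
        trace.append((df, rel, dead))
    return trace


# Hypothetical annual rates, one-year cycles, ten-year horizon
for cycle, state in enumerate(cohort_trace(10, 1.0, 0.10, 0.02, 0.30)):
    print(cycle, [round(x, 3) for x in state])
```

Splitting the exit probability in this way keeps each row of the trace summing to one. Converting each cause-specific rate separately and adding the resulting probabilities would not, in general, give the same result.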

Adherence To A Health Economic Reference Case

- Will the proposed model meet the criteria of the reference case specific to the decision-making jurisdiction in which the model will be used? If not, why should the anticipated deviations be considered appropriate?

Simplifications And Abstractions

- Have any relevant events, costs or outcomes been purposefully omitted from the proposed model structure? If so, on what grounds may these omissions be considered appropriate?

- Are there any parts of the disease or treatment pathways that have been excluded altogether? Why?

- What is the expected impact of such exclusion/simplification decisions? Why?

- What are the key structural simplifications? How does the design-oriented model structure differ from the problem-oriented conceptual models? Why should these deviations be considered appropriate or necessary? What are the expected direction and magnitude of the impact of these simplifications on the model results?

Box 4 presents recommendations for developing and reporting design-oriented conceptual models.

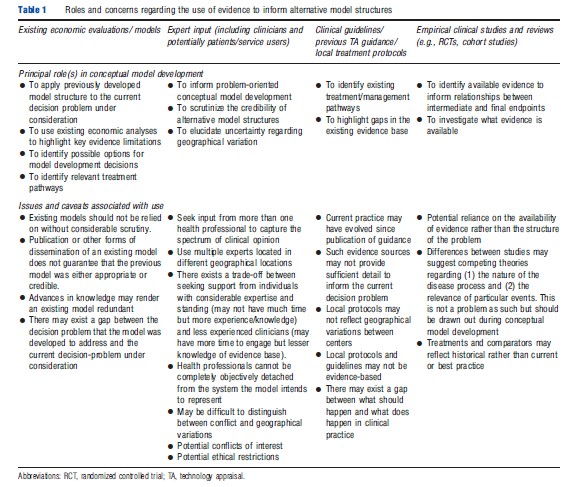

Evidence Sources To Inform Conceptual Models

A number of potential evidence sources may be useful for informing these types of conceptual model. Although the evidence requirements for any model will inevitably be broader than those for traditional systematic reviews of clinical effectiveness, the task of obtaining such evidence should remain a systematic, reproducible process of inquiry. Possible sources of evidence to inform conceptual models include: (1) clinical input; (2) existing systematic reviews; (3) clinical guidelines; (4) existing efficacy studies; (5) existing economic evaluations or models; and (6) routine monitoring sources. Table 1 sets out some pragmatic concerns which should be borne in mind when using these evidence sources to inform conceptual model development.

References:

- Ackoff, R. L. (1979). The future of operational research is past. Journal of the Operational Research Society 30, 93–104.

- Brennan, A. and Akehurst, R. (2000). Modelling in health economic evaluation: What is its place? What is its value? Pharmacoeconomics 17(5), 445–459.

- Briggs, A., Claxton, K. and Sculpher, M. (2006). Decision modelling for health economic evaluation. New York: Oxford University Press.

- Chilcott, J. B., Tappenden, P., Rawdin, A., et al. (2010). Avoiding and identifying errors in health technology assessment models. Health Technology Assessment 14(25), i–135.

- Cooper, N. J., Coyle, D., Abrams, K. R., Mugford, M. and Sutton, A. J. (2005). Use of evidence in decision models: An appraisal of health technology assessments in the UK to date. Journal of Health Services Research and Policy 10(4), 245–250.

- Eddy, D. M. (1985). Technology assessment: The role of mathematical modelling. Assessing medical technology. Washington DC: National Academy Press.

- Lacy, L., Randolph, W., Harris, B., et al. (2001). Developing a consensus perspective on conceptual models for simulation systems. Proceedings of the 2001 Spring Simulation Interoperability Workshop.

- Law, A. M. (1991). Simulation model’s level of detail determines effectiveness. Industrial Engineering 23(10), 16–18.

- Robinson, S. (2008). Conceptual modelling for simulation Part I: Definition and requirements. Journal of the Operational Research Society 59, 278–290.

- Rosenhead, J. and Mingers, J. (2004). Rational analysis for a problematic world revisited: Problem structuring methods for complexity, uncertainty and conflict (2nd ed). England: Wiley.

- Sargent, R. G. (2004). Validation and verification of simulation models. Proceedings of the 2004 Winter Simulation Conference. Available at: https://www.informs-sim.org/wsc04papers/004.pdf.