Great achievements in most fields of medical care, such as new diagnostic and therapeutic procedures, usually remain highly esteemed for long periods, because the diseases for which they were introduced are still prevalent. In epidemiology, this is not necessarily the case. Here, successes, often followed by equally successful achievements in preventive medicine, may permanently eliminate the medical problem that epidemiological research solved, thereby relegating those successes to oblivion in the shadows of medical history. Thus, the history of medicine may play a greater role in epidemiology than in most other medical disciplines.

This applies particularly to teaching. In brief, medical students face two essentially different didactic approaches in their curriculum. The first relates to individual-oriented challenges, represented by diagnostics and therapeutics. The second relates to group-oriented challenges: acquiring information about health problems in the community, their causes, and their prevention. In medical teaching, the case history is an invaluable didactic element, and case histories are easily obtained from individual-oriented clinical practice. Successful efforts against group-oriented community challenges, however, inevitably consign their victories to the history of medicine, with the inherent danger that they will be considered less relevant to current challenges or even forgotten; once the problem is resolved, it no longer represents an imminent health risk. The eradication of smallpox, achieved by 1977 when the last naturally occurring case was recorded, is a case in point. This leaves the history of epidemiology with an important teaching objective in addition to the significance of the topic per se.

Admittedly, the subject is vast; rather than giving a complete account of the history, this article aims to identify patterns in terms of eras or paradigms as well as time trends, and to illustrate them with relevant examples. Contemporary perinatal, cardiovascular, and cancer epidemiology are discussed in separate articles, as are important topics related to intrauterine programming, life-course epidemiology, and genetic epidemiology.

Definition of Epidemiology

In A Dictionary of Epidemiology, epidemiology is defined as “the study of the distribution and determinants of health-related states or events in specified populations and the application of this study to control of health problems” (Last, 2001: 62).

More operationally, epidemiology can be defined as the methodology for obtaining information on the distribution of diseases and their determinants in the community, e.g., according to time, place, gender, age, and often occupation. In perinatal epidemiology, maternal age, birth order, gestational age, and birth weight are often added. The objective is to use this information in the search for the cause(s), or etiology, of a disease or another medical outcome such as a complication, in order to prevent an adverse outcome. Thus, epidemiology may also be defined as the medical branch that aims to clarify the causes of diseases.

According to this definition, when does the history of epidemiology actually start? When did the cause of a disease first become a matter of concern? And when did the search for the information needed to clarify such a cause first begin?

Obviously, these questions are difficult to answer, but ancient sources may contribute to their elucidation. Before attempting to address the issue any further, the concept of causation and the knowledge and insights necessary to clarify the etiology of a disease should be discussed.

In brief, a factor is causal when its manipulation is followed by corresponding changes in the outcome. In elaborating on this, one often distinguishes between necessary and sufficient causes. In the first category, where the cause is both necessary and sufficient, the element of randomness is nil. This is a rare situation; measles virus in an unimmunized individual is often used as an example. In the second category, where the cause is necessary but not sufficient, cofactors play a decisive role; in leprosy, for example, Mycobacterium leprae is necessary but not sufficient, and poor nutrition would be needed as a cofactor. In the third category, where the cause is sufficient but not necessary, we find multifactorial diseases such as lung cancer, which may be caused by cigarette smoking or by radon. This may be an oversimplification, since in most cases cofactors would also be necessary. That brings us to the fourth category, in which a factor is neither necessary nor sufficient: the large number of medical outcomes whose etiology involves an interaction, often between genetic and environmental factors, which may be considered webs of causation.

In short, our objective is to show how the concepts of risk factors, epidemiology, and preventive medicine have evolved throughout history and to illustrate the consequences. When looking into the historical roots of epidemiology, it is useful, as a narrative thread, to contrast medical activities related to the individual, i.e., the patient, with activities related to the group, i.e., the community. The latter are the activities relevant to epidemiology.

Epidemiology in the Bible

Even today, some hold that knowledge of risk factors might threaten the integrity, independence, and well-being of humans. This attitude relates to postmodern values that question the role of the community in general and of public health in particular (Mackenbach, 2005). Such a fear of knowing the causes of disease undermines the basic objectives of epidemiology and deserves further attention. The idea is not new; it may even be identified in perhaps the most important book of Western civilization, the Bible. In Genesis, God said to Adam: “of the tree of the knowledge of good and evil, thou shalt not eat of it: for in the day that thou eatest thereof thou shalt surely die.”

Figure 1. Temptation and Fall, Michelangelo

This prohibition, and the subsequent eating of the fruit at the serpent’s instigation, have prompted a multitude of incompatible interpretations (Michelsen, 1972). Nevertheless, the general interpretation of the legend implies that the acquisition of knowledge per se is a mortal sin, an interpretation that may remain influential and widespread even today (Figure 1). Unpleasant events such as disasters, catastrophes, and diseases are still often attributed to fate or God’s will. On the other hand, there is also a prevalent attitude today that disease is, to a great extent, the result of stochastic processes with a considerable element of randomness.

Very few causal factors are sufficient (and necessary). Consequently, it is argued, medical efforts should no longer focus on causes or prevention, but rather on helping patients cope with their situation, i.e., on empowering the patient. Admittedly, random events do play a role; if you walk down the street and are killed by a falling roof tile, you are the victim of a process with a considerable element of randomness. Paradoxically, both a belief in fate or God’s will and a belief in random events marginalize the role of risk factors in general, and of epidemiology and preventive medicine in particular.

Still, there is strong evidence of the benefits of the risk factor concept and approach. If you put your baby to sleep in a face-down (prone) position, the child’s risk of dying from sudden infant death syndrome increases 13-fold (Carpenter et al., 2004). A child who dies in this way is clearly the victim of a risk factor, and the campaign advocating the supine sleeping position, the so-called Back to Sleep campaign, has no doubt saved many infants from dying.

Figure 2. The Plague of Ashdod, Nicolas Poussin

So far, we have discussed only the historical roots of negative attitudes toward the search for causes of disease. Further examples exist in the Bible. Around 1000 BC, King David took a census of his people, admittedly not to survey their living conditions and thereby potential determinants of disease, but probably for military purposes. The Lord informed David that he had sinned and punished the people with a terrible plague. The census is referred to as one of the great sins of the Old Testament (Hauge, 1973), although the text does not specify why; military activities are otherwise not condemned in the Old Testament. The event nevertheless had great impact on subsequent generations, as illustrated by a seventeenth-century painting (Figure 2), and the collection of this type of data may long have been considered controversial at the very least. Thus, William the Conqueror’s remarkable survey of England in 1086, recorded in the Domesday Book, was carried out against great popular resentment and was not repeated in Britain or abroad for a long time.

Ancient Roots of Epidemiology

Figure 3. Hippocrates

Where do we find the earliest roots of epidemiology? Clinical, patient-oriented medicine, whose paramount objectives are thorough diagnosis and effective treatment, does not include prevention among its aims; after all, the patient is already ill. In fact, the term patient is problematic in our context and distracts from the aims of epidemiology and prevention; it should be replaced by population or community, terms that are much less concrete, at least to the physician. Thus, in ancient times, epidemiology and prevention were peripheral activities for the physician. This is amply illustrated by one of the first individual representatives of medicine, Hippocrates (460–377 BC) (Figure 3). He founded a school to which a series of medical texts, the Corpus Hippocraticum, is attributed (Aggebo, 1964). The authors were interested not only in clinical problems but also in associations between health and air, water, food, dwellings, and living conditions. Recommendations for avoiding disease were issued both to the individual and to the community. In particular, the importance of good drinking water and clean food processing was emphasized. Moderation in eating and drinking, bathing, and physical exercise was considered most important for preserving one’s health and developing a fit body.

These ideas were not restricted to individual Greek physicians. Similar concepts existed in ancient India, where civil servants were appointed to be responsible for the water supply and food safety. The Babylonians and Assyrians had complex systems of water supply and sewage as early as 3000–4000 BC. Similar systems were established in ancient Egypt, where such hygienic functions were closely tied to religion, e.g., in rules on which animals could or could not be eaten (Gotfredsen, 1964).

The strict hygiene rules detailed in the Old Testament’s Books of Moses may have been influenced by Egypt, where Moses lived for many years among the Jewish people. Indeed, many of the regulations in the Books of Moses seem to reflect knowledge of risk factors, and through the wide distribution of the Bible, they enormously influenced subsequent generations and their concepts of risk factors and prevention up to recent times.

Figure 4. Outfall of Cloaca Maxima

Hippocrates nevertheless introduced a new concept: disease is the result of natural causes and not a consequence of offending the god(s). These ideas, later detailed by the Hippocratic school, were further developed by subsequent generations, also in terms of practical measures. Thus, Plato (427–347 BC) suggested that civil health inspectors should be appointed for streets and roads, dwellings, and the water supply (Natvig, 1964). The ideas were applied most extensively by Roman civilization (Gotfredsen, 1964). As early as 450 BC, the Romans enforced practical regulations on food safety, water supply, and the handling of dead bodies. Between 300 and 100 BC, nine large aqueducts were constructed, supplying the city of Rome with an estimated 1800 l of water per inhabitant per day, an enormous amount (today’s supply amounts to 300–400 l per day in most cities) (Natvig, 1964). At the same time, a complete sewage system, the Cloaca Maxima, was developed; it remained in use until recently (Figure 4).

It is not certain that these elaborate hygienic systems of Antiquity were implemented solely to prevent disease. Physicians played a remarkably limited role in their development. The motivation for the systems was probably more religious in the early cultures, while the Romans were perhaps more oriented toward comfort. Furthermore, the great Roman physician Galen (131–202 AD) seems to have been interested in nutrition and physical fitness far more from a clinical than from a community health perspective (Gotfredsen, 1964).

This does not mean that physicians of Antiquity lacked interest in the causes of disease; their interest, however, seems to have been more theoretical. Elaborate pathogenetic systems were established in which the four liquids (blood, yellow bile, black bile, and phlegm), the four cardinal humors of the body, formed the basis of the so-called humoral pathology. Etiological theories also attributed disease to violations of good habits with respect to food and alcohol consumption, rest and work, as well as to sexual excesses. In addition, epidemic diseases were attributed to miasmas, noxious vapors believed to be present in the air and exhaled from unhealthy ground. These pathogenetic and etiological theories, first established by the Greeks and later developed by the Romans, particularly Galen, survived until the nineteenth century despite the lack of empirical evidence (Sigerist, 1956).

Several factors have been suggested to explain the slow progress of medicine in general and epidemiology in particular. One is that the fall of the Roman Empire caused discontinuity in the medical sciences; the Northern peoples lacked the medical traditions developed over the centuries in Greece and Rome. Another is the new power, the Christian church, which had little incentive to ask questions about determinants of disease and preventive measures in the community. Despite the precepts of the Books of Moses, which probably aimed at prevention, the Christian spirit was dedicated to caring for the sick patient rather than protecting the community against disease. Thus, clinical functions developed in several monasteries, where monks and nuns often organized hospital services. Furthermore, the Christian ideal, which concentrated on spiritual well-being and often denied or neglected the human body, may actually have threatened healthy behavior (Sigerist, 1956; Gotfredsen, 1964; Natvig, 1964).

During this period of public health deterioration, one lighthouse remained in the medieval darkness: the medical school of Salerno, south of Naples in Italy, established in the ninth century (Natvig, 1964). During the twelfth and thirteenth centuries, this school developed into a center of European medicine and became a model for the schools of medicine later set up within the universities founded across Europe. But even in Salerno, the main focus was the clinical patient. A health poem written around 1100 did promote behavior beneficial for remaining in good health, but it was addressed to the individual rather than the community (Gotfredsen, 1964). Consequently, the important systems for food safety, drinking water, and sewage set up in Antiquity were neglected, and the Middle Ages were scourged by a series of epidemics that did not come to an end until the nineteenth century.

The Fight against the Great Plagues

Epidemiology in the modern sense, i.e., clarifying the cause of a disease with a view to prevention, barely existed before 1500. Medieval services relevant to public health were probably set up for comfort rather than for disease control. Where medical interest in healthy behavior existed, it was motivated more by individual counseling than by public mass campaigns. Efforts to establish associations between risk factors and disease are difficult to identify, in part because disease, like any other mishap, was considered to be determined by fate or God(s).

A few important exceptions to this broad statement must be mentioned. Among the epidemics plaguing medieval communities, bubonic plague was by far the most important. Bubonic plague apparently existed at the time of Hippocrates and Galen and is even mentioned in the Ebers Papyrus from around 1500 BC. Epidemics of bubonic plague were frequent in Europe from the seventh to the thirteenth century (Gotfredsen, 1964).

The Black Death, around the middle of the fourteenth century, surpassed any previous epidemic in terms of mortality and case fatality. Of a European population of 100 million, more than 25 million died (Gotfredsen, 1964). Although the plague was considered a punishment from God, fear of contagion was widespread, as was the idea that isolation might be beneficial. In some cases, attempts were made to isolate a city, but with no convincing effect; the city might already have been infected, or isolation was impossible to achieve because the disease was also spread by rats and their fleas. Still, during subsequent plague epidemics in the fourteenth century, rational and effective measures were taken. For example, in 1374, the city council of Ragusa (Dubrovnik), Croatia, ordered all suspect vessels to be kept in isolation for 30 days. In 1383, a similar regulation was introduced in Marseille, this time requiring 40 days of isolation. Thus the concept of quarantine (from the Italian quaranta giorni, 40 days) was introduced, which came to influence the fight against infectious diseases far into the twentieth century.

Isolation was also effective against another killer of the Middle Ages, more silent and less dramatic, but still highly influential in people’s minds: leprosy. Leprosy has been known in Europe since the fifth century, when the first leprosy hospitals were built in France. The disease was recognized as infectious, and isolation of infected patients was attempted, for example by regulations in Lyon (583) and in northern Italy (644) (Gotfredsen, 1964). In the wake of the crusades, the incidence of leprosy increased considerably, and leprosy hospitals were set up in most cities, almost always outside the city walls. Leprosy patients were usually forced to live in these institutions, with very limited rights to move outside. On the continent and in Britain, leprosy was more or less eradicated by the end of the sixteenth century, presumably because of strict isolation of infectious cases. A new wave of leprosy peaked in Norway around 1850, and, as documented by the National Leprosy Registry of Norway, isolation was an important factor in the subsequent eradication of the disease by 1920 (Irgens, 1973).

The Search for the Cause of Syphilis

Figure 5. Girolamo Fracastoro

The Renaissance introduced new ideas that brought medicine far beyond what was achieved in Antiquity. Girolamo Fracastoro (1483–1553) of Padua, Italy (Figure 5), has been considered the great epidemiologist of the Renaissance. In 1546, he published a booklet on infectious diseases, including smallpox, measles, leprosy, tuberculosis, rabies, plague, typhus, and syphilis. Fracastoro suggested the existence of germs that could be disseminated from person to person, through clothes and through the air (Gotfredsen, 1964).

Syphilis was undoubtedly a novelty at this time, which is why the new ideas were developed and attracted attention. Although it has been claimed that syphilis existed in Europe before 1500, it is generally held that Columbus brought the disease back from his first voyage to America in 1492. Fracastoro suggested that most patients were infected through sexual intercourse, although he had also seen small children infected by their wet nurses. The spread of the disease from 1495, when soldiers besieging Naples were infected, was remarkable and followed the movements of the troops after the campaign. That same year, the disease became well known in France, Switzerland, and Germany, and in 1496 in the Netherlands and Greece. During the following years, syphilis was introduced in most European countries, to a great extent following soldiers wherever battles were fought.

Interestingly, the disease was named after the country in which it supposedly originated: in Italy it was called the Spanish disease, in France the Italian disease, in Britain the French disease, in the Nordic countries the German disease, and so on. Fracastoro coined the term syphilis in 1530, when he published the poem Syphilis sive morbus gallicus (Syphilis, or the French Disease), in which the hero, the shepherd Syphilis, persuades the people to betray the Sun God. In revenge, the god punishes the people with a terrible epidemic disease named after the perpetrator. The poem, which was used to call attention to urgent scientific results, may be considered an elegant example of the importance in research of convincing people of the validity of one’s findings, a function one might call scientific rhetoric.

Figure 6. John Hunter

Venereal diseases such as syphilis set the stage for a remarkable event in the history of medicine, one that illustrates both the search for etiology and the concept of experimentation. John Hunter (1728–93) (Figure 6), a Scottish surgeon considered the most influential figure in scientific surgery of his century, was intrigued by the question of whether syphilis and gonorrhea were the same disease, with a variety of symptoms depending on a series of cofactors. Hunter presented this hypothesis in A Treatise on the Venereal Disease in 1786, supporting it with evidence from an experiment conducted on himself. He inoculated his own penis with pus taken from a gonorrhea patient and eventually developed typical syphilis. Apparently, the donor patient had a mixed infection with both gonorrhea and syphilis.

The publication and the findings of this experiment caused heated debate on whether experiments like this were ethically acceptable. Further experiments in Edinburgh published in 1793 concluded that inoculation from a chancre (i.e., syphilis) produces a chancre and inoculation from gonorrhea produces gonorrhea (Gotfredsen, 1964).

Smallpox: Pitfalls and Victory

Figure 7. Lady Mary Wortley Montagu

Smallpox (variola) had been one of the scourges of humankind since ancient times. It had long been observed that a person was very rarely affected by smallpox twice. This led to so-called variolation, in which pus from a smallpox case was inoculated into the skin of another person, a practice developed in the Orient that spread to Europe in the eighteenth century. Lady Mary Wortley Montagu (Figure 7), the wife of the British ambassador to Turkey, was instrumental in introducing variolation to Europe; this is another example of rhetoric related to research, even though the research element in this case was rather remote. In 1717, Lady Montagu wrote a letter to smallpox-infested Britain, saying that no one died of smallpox in Turkey, and she had her son inoculated. Back in London, she started a campaign to introduce variolation in Britain (Gotfredsen, 1964; Natvig, 1964). Voices were raised against the practice, partly because the method actually contributed to the spread of the disease (inoculated persons might infect noninoculated persons) and partly because some of the inoculated persons died from smallpox. Thus, toward the end of the century, variolation had still not achieved a breakthrough.

Figure 8. Jenner vaccinating a boy

Nevertheless, variolation may have paved the way for the vaccination against smallpox introduced by Edward Jenner (1749–1823) (Henderson, 1980). Jenner (Figure 8) was a student of John Hunter and worked as a general practitioner in Berkeley, England. Cowpox, a smallpox-like disease of cattle, was prevalent in his region, and he observed that, to a large extent, children who were variolated had no clinical symptoms after the variolation. People believed this was because previous exposure to cowpox was protective. In 1796, Jenner conducted an experiment in which he vaccinated a boy with pus from a cowpox lesion on a milkmaid’s hand. Later, Jenner put the boy into bed with smallpox-infected children and also variolated him. The boy did not get smallpox and had no symptoms after variolation. Jenner interpreted this as a protective effect of the inoculation and tried to publish his findings, but the article was rejected for lack of firm evidence. Two years later, Jenner published his treatise An Inquiry into the Causes and Effects of the Variolae Vaccinae, in which he described another seven vaccinated children and a further 16 individuals who had previously had cowpox, none of whom had symptoms after Jenner variolated them (Gotfredsen, 1964). He concluded that inoculation with cowpox pus, i.e., vaccination, provided the same protection as variolation, and that the new method, unlike variolation, did not involve any risk of infecting other people. Furthermore, the method was seemingly harmless to the persons vaccinated (Henderson, 1980).

Compared with the syphilis experiments, this elegant trial was ethically far less controversial, although the question was raised whether inoculation with an animal disease might introduce an unknown number of new diseases in humans, a pertinent question at that time and, in other contexts, even today. Still, Jenner’s conclusions very quickly received broad support. The concerns proved far less serious than feared, and in 1802 an institution for vaccination was established in Britain, reorganized in 1808 as the National Vaccine Establishment. An international vaccination movement emerged, promoting this protective measure on a large scale through mass campaigns. As early as 1810, vaccination was made compulsory by law in Denmark and Norway (Gotfredsen, 1964). The introduction of vaccination also shows the importance in science of convincing people of the validity of one’s findings, virtually a prerequisite for success in epidemiology and public health.

The Epidemiological Avenue

Before exploring the history of epidemiology further, we should review the analytic methods used to establish an association between a factor and a medical outcome (Rothman and Greenland, 1998). The basic principle in epidemiology is that individuals exposed to a risk factor should have a higher occurrence of a disease than nonexposed individuals. Such an association can be established by different methods, which together have been referred to as the epidemiological avenue.

First comes the case study, which does not take the nonexposed into consideration at all and for that reason is not included in the avenue by many epidemiologists. Today, a case study will seldom be published on its own, but it is often the first observation that sheds light on a problem and from which a hypothesis is formulated. A case study is particularly relevant if exposure and outcome are rare or new; in such a situation, the outcome may be assumed not to occur in the unexposed. A case study is typically followed by a study further along the epidemiological avenue, often a case–control study. The history of epidemiology offers many examples of interesting case studies, often reported by clinicians who observed a particular risk factor in a rare disease. Thus, the British surgeon Percival Pott (1714–88) found that almost all of his patients with the very rare scrotal cancer were or had been chimney sweeps (Gotfredsen, 1964). He concluded that chimney sweeps were exposed to some etiological agent in their work, which often required them to scrub the chimneys by passing their own bodies through the narrow flues. More recently, the association between phocomelia, a very rare birth defect, and the drug thalidomide was identified by clinicians who observed that in these cases all the mothers had taken thalidomide during the first trimester (Warkany, 1971).

Next on the avenue is the ecological design, which characterizes a group of individuals with respect to exposure rather than the individual per se. Typically, the group may be a nation, the exposure may be, for example, average salt consumption, and the outcome may be stroke mortality. If a number of countries are included, it may turn out that countries with a low salt intake have low stroke mortality and vice versa, suggesting a cause–effect association. Such a group-level association does not show that the individuals with a high salt intake are the ones who die of stroke, and misinterpreting it in that way is the background for the term ecological fallacy. Conversely, the absence of an association at the group level is a serious argument against interpreting an association observed at the individual level as causal.
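The gap between group-level and individual-level associations can be made concrete with a small worked example. The Python sketch below rests entirely on invented assumptions: three hypothetical countries (A, B, C), hypothetical salt intakes, and hypothetical stroke counts, with the helper names `make_country`, `country_summary`, and `within_country_risk_ratio` introduced only for illustration. By construction, salt has no effect on stroke within any country, yet the country averages line up perfectly.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def make_country(mean_salt, cases_per_half, n_per_half=10):
    """HYPOTHETICAL country: half the people eat mean_salt - 1 g/day and half
    eat mean_salt + 1 g/day, with the SAME number of strokes in each half,
    so salt has no effect on stroke within the country."""
    people = []
    for salt in (mean_salt - 1, mean_salt + 1):
        for i in range(n_per_half):
            people.append((salt, 1 if i < cases_per_half else 0))
    return people


def country_summary(people):
    """Ecological (group-level) data: mean salt intake and stroke rate."""
    n = len(people)
    return sum(s for s, _ in people) / n, sum(y for _, y in people) / n


def within_country_risk_ratio(people):
    """Individual-level contrast: stroke risk, high-salt vs low-salt people."""
    low = min(s for s, _ in people)
    high = max(s for s, _ in people)

    def risk(level):
        outcomes = [y for s, y in people if s == level]
        return sum(outcomes) / len(outcomes)

    return risk(high) / risk(low)


# Invented numbers for illustration only.
countries = {
    "A": make_country(5, 1),   # 2 strokes among 20 people
    "B": make_country(8, 2),   # 4 strokes among 20 people
    "C": make_country(11, 3),  # 6 strokes among 20 people
}

summaries = [country_summary(p) for p in countries.values()]
ecological_r = pearson([m for m, _ in summaries], [r for _, r in summaries])
individual_rrs = {name: within_country_risk_ratio(p) for name, p in countries.items()}

print(f"country-level correlation: {ecological_r:.2f}")
print("within-country risk ratios:", individual_rrs)
```

The country-level correlation is essentially 1, while every within-country risk ratio is 1. Pooling all 60 individuals would still show an association, because whatever differs between the countries confounds the comparison; only the within-country contrast isolates the individual-level effect, which is null here by design.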

Further down the avenue are the survey, the case–control study, and the cohort study, which are all based on data characterizing the individual rather than the group. In a survey, all individuals of a population are characterized at the same time with respect to exposure and outcome, while in a cohort study all individuals are first characterized with respect to exposure and then followed up with respect to outcome. In the more parsimonious but still powerful case–control study, cases are selected among individuals with the disease and controls among individuals without it, and both groups are then studied with respect to previous exposure.
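The practical difference between the cohort and the case–control designs can be sketched with a 2×2 table. The Python below uses entirely hypothetical counts and a hypothetical `TwoByTwo` helper (cells a = exposed cases, b = exposed non-cases, c = unexposed cases, d = unexposed non-cases). A cohort study follows whole exposure groups, so the risk in each group, and hence the risk ratio, can be computed directly. A case–control study fixes the numbers of cases and controls by design, so only the odds ratio is meaningful; for a rare disease it approximates the risk ratio.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """HYPOTHETICAL 2x2 table.
    a = exposed cases,   b = exposed non-cases (or exposed controls)
    c = unexposed cases, d = unexposed non-cases (or unexposed controls)
    """
    a: int
    b: int
    c: int
    d: int

    def risk_ratio(self) -> float:
        """Risk in exposed / risk in unexposed; valid only when whole
        exposure groups were followed up, as in a cohort study."""
        risk_exposed = self.a / (self.a + self.b)
        risk_unexposed = self.c / (self.c + self.d)
        return risk_exposed / risk_unexposed

    def odds_ratio(self) -> float:
        """Cross-product ratio ad/bc; estimable in a case-control study,
        where the case:control ratio is set by the investigator."""
        return (self.a * self.d) / (self.b * self.c)


# Hypothetical cohort: 10,000 exposed and 10,000 unexposed people, rare disease.
cohort = TwoByTwo(a=30, b=9_970, c=10, d=9_990)

# Hypothetical case-control study from the same population: all 40 cases plus
# 400 controls sampled from non-cases, about half of whom are exposed.
case_control = TwoByTwo(a=30, b=200, c=10, d=200)

print(f"cohort risk ratio:       {cohort.risk_ratio():.3f}")
print(f"cohort odds ratio:       {cohort.odds_ratio():.3f}")
print(f"case-control odds ratio: {case_control.odds_ratio():.3f}")
```

Calling `risk_ratio()` on the case–control table would be meaningless, since its denominators depend on how many controls the investigator chose to sample; the odds ratio does not, which is why it is the natural measure for that design.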

Finally, there is the experiment, in epidemiology often called a randomized controlled trial, in which a more or less homogeneous population is randomized into groups exposed to various protective agents and compared to a nonexposed group, which may be given a placebo. The roots of this method are found in a rudimentary form in the Old Testament where King David ordered some of his servants to eat of the king’s food, a procedure reiterated throughout history and even today when the host tastes his wine before serving his guests.

Evidence-Based Epidemiology

Methods and concepts developed for epidemiological purposes, particularly those related to experiments, may be relevant in a clinical setting as well. The first roots of evidence-based medicine are found in the first half of the nineteenth century. Pierre Charles Alexandre Louis (1787–1872) at the Charité hospital in Paris called attention to the need for more exact knowledge in therapy. He questioned the benefits of venesection in different diseases, particularly pneumonia, and in 1835 published his treatise Recherches sur les effets de la saignée. Venesection was the standard treatment for pneumonia, and for ethical reasons he was unable to undertake an experiment in which a group of patients was denied this treatment. Instead, he categorized his pneumonia patients according to when their venesection was performed. Among those treated during the first 4 days, 40% died, versus 25% among those treated later (Gotfredsen, 1964). Although these data may be subject to selection bias, since the more serious cases may have been treated earlier, the results raised skepticism about venesection as a treatment for pneumonia.
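In today’s terminology, the two mortality proportions quoted above can be summarized as a risk ratio (RR) and a risk difference (RD), comparing early with later venesection. This is only arithmetic on the reported percentages; it inherits the same possible selection bias and says nothing about the numbers of patients involved.

$$
\mathrm{RR} = \frac{0.40}{0.25} = 1.6, \qquad \mathrm{RD} = 0.40 - 0.25 = 0.15
$$

In other words, mortality was 60% higher in relative terms, or 15 percentage points higher in absolute terms, among patients bled during the first 4 days.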

Louis was also interested in the causes of disease and conducted studies, for example on tuberculosis, in which he obtained data questioning the role of heredity in this disease and the benefits of a mild climate. In his conclusion, he used terms such as “the exact amount of hereditary influence,” which point toward the concept of attributable risk, and one of his students, W. A. Guy (1810–85), conceptualized what we now refer to as the odds ratio (Lilienfeld and Lilienfeld, 1980).
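Using the cell labels of a standard 2×2 table (a = exposed cases, b = exposed non-cases, c = unexposed cases, d = unexposed non-cases), the measures alluded to here can be written as follows. The notation is a conventional modern one, not Louis’s or Guy’s own. The attributable risk (AR) is the excess risk among the exposed; the attributable fraction among the exposed (AF) is the share of their risk that would disappear if the exposure were removed, assuming a causal effect; and the odds ratio (OR) is the cross-product ratio.

$$
R_e = \frac{a}{a+b}, \qquad R_u = \frac{c}{c+d}, \qquad \mathrm{RR} = \frac{R_e}{R_u}
$$

$$
\mathrm{AR} = R_e - R_u, \qquad
\mathrm{AF}_e = \frac{R_e - R_u}{R_e} = \frac{\mathrm{RR} - 1}{\mathrm{RR}}, \qquad
\mathrm{OR} = \frac{a/b}{c/d} = \frac{ad}{bc}
$$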

Louis’s work also paved the way for the development of medical statistics in general, which received a strong boost from Jules Gavarret (1809–90), who in Principes généraux de statistique médicale (1840) concluded that medical theories should be based not on opinion but on evidence that could be measured (Gotfredsen, 1964).

The contributions by Louis and Gavarret may be considered consequences of a public health movement that developed in France during the first half of the nineteenth century. Even if the roots of epidemiology, as we have seen, are traceable back to Hippocrates, the growth of modern epidemiological concepts and methods took place in the postrevolutionary Parisian school of medicine. The French Revolution may have eliminated many medical beliefs and broadened the recruitment to the medical profession, which was called upon when Napoleon Bonaparte (1769–1821) reorganized France. However, the era of the French public health movement came to an end toward the middle of the nineteenth century, possibly due to the lack of public health data; France did not develop a vital statistics system (Hilts, 1980; Lilienfeld and Lilienfeld, 1980).

Figure 9. William Farr

In England, epidemiology flourished because such a system existed, largely owing to William Farr (1807–83) (Figure 9), a physician who has been considered the father of medical statistics (Eyler, 1980). As chief statistician of England’s General Register Office, where he served for 40 years, Farr became a man of influence and a prominent member of what may be called the statistical movement of the 1830s, itself a product of the broader reform movement (Hilts, 1980). The members of the statistical movement saw social facts as a valuable weapon for the reform cause. They considered statistics a social rather than a mathematical science; it has been said that few members of this movement mastered more than rudimentary arithmetic.

The hospital as the arena of observation and evidence-based medicine was explicitly highlighted by Benjamin Phillips (1805–61), who in 1837, after years of experience as a doctor at the Marylebone Infirmary, read a paper before the Royal Medical and Chirurgical Society that was reported in the Journal of the Statistical Society of London in 1838. The editor claimed that the article was of “. . . great interest, because so few attempts have been made in this country to apply the statistical method of enquiry to the science of surgery. The object of the enquiry was to discover whether the opinion commonly entertained with respect to the mortality succeeding amputation is correct. . . .” And Phillips wrote:

In the outset, I am bound to express my regret, that the riches of our great hospitals are rendered so little available for enquiries like the present, that these noble institutions, which should be storehouses of exact observation, made on a large scale, and from which accurate ideas should be disseminated throughout the land, are almost completely without the means of fulfilling this very important object. (Phillips, 1838: 103–105)

Figure 10. Ignaz Philipp Semmelweis

These critical statements were representative of the statistical movement. Although its ambitions were general in nature, they were amply illustrated by the achievements of Ignaz Philipp Semmelweis (1818–65) (Figure 10), whose influence extended far beyond clinical epidemiology. During the first half of the nineteenth century, puerperal fever caused serious epidemics at the large European maternity hospitals, in which a large proportion of the women giving birth died. The general opinion was that the contagion was miasmatic, related to atmospheric or telluric conditions. Consequently, the institutions were often moved to other locations, but to no effect (Gotfredsen, 1964).

In 1847, Semmelweis, a Hungarian obstetrician working in Vienna, made an observation that led him to the etiology of puerperal fever (Loudon, 2000; Lund, 2006). A pathologist at the hospital died from septicemia after being injured during an autopsy, with symptoms and findings identical to those observed in puerperal fever. Semmelweis therefore hypothesized that puerperal fever was caused by doctors and medical students who took part in both autopsies and deliveries, carrying a germ from one activity to the other. He found that the rate of lethal puerperal fever was 114 per 1000 births on the ward attended by medical students versus 27 per 1000 on the ward attended by midwifery students, who did not take part in autopsies. After a campaign instructing everyone attending a birth to wash their hands in chloride of lime, the rate declined to 13 per 1000 in both departments. Semmelweis’s findings suffered from the lack of a convincing presentation. Worse, in confused and discrediting presentations he made many enemies by accusing colleagues of indolence and negligence. Still, he had conducted an elegant experiment with convincing results, shedding light on the etiology of an important disease long before the dawn of the bacteriological era.
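
The rates quoted above translate directly into the comparative measures used today. The worked arithmetic below uses only the figures given in the text, treating them as deaths per 1000 births.

$$
\begin{aligned}
\text{rate ratio, students' vs. midwives' ward} &= \frac{114}{27} \approx 4.2\\
\text{rate difference} &= 114 - 27 = 87 \ \text{deaths per 1000 births}\\
\text{reduction after hand washing, students' ward} &= \frac{114 - 13}{114} \approx 89\%\\
\text{reduction after hand washing, midwives' ward} &= \frac{27 - 13}{27} \approx 52\%
\end{aligned}
$$

The much larger relative fall on the students’ ward is consistent with Semmelweis’s hypothesis that autopsy work was the main source of the contagion.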

Cholera, Right or Wrong

To many epidemiologists, John Snow’s fight against cholera represents the foundation of modern epidemiology (Sigerist, 1956; Natvig, 1964). Cholera is assumed to have been endemic in India in historical times, but it was unknown in Europe until the nineteenth century, when Europe was hit by a series of pandemics. The first started around 1830, causing an estimated 1.5 million deaths (Gotfredsen, 1964). This first wave demonstrated to the population of Europe that cholera was a most serious threat to the community. However, knowledge of the etiology of the disease was scarce. Cholera was obviously an epidemic disease, but whether it spread from person to person or resulted from miasma rising from contaminated ground, the ancient etiological concept, was uncertain. The apparent failure of quarantine to halt the spread of the disease suggested the latter mechanism.

Figure 11. John Snow

Thus, when the second pandemic hit, lasting from 1846 to 1861, no effective preventive measure existed. Europe remained unprepared, and the challenge to epidemiology and public health was enormous. In 1848, John Snow (1813–58) (Figure 11) was working as a general practitioner in London when an epidemic of cholera occurred among his patients. In a publication of 1849, Snow presented his observations of the distribution of the disease by time, place, age, gender, and occupation. He concluded that the agent causing cholera entered the body through eating or drinking, that it was present in the patients’ stools, and that the disease was propagated when sewage containing the agent contaminated the drinking water. To the extent that this publication received any attention, its conclusions were rejected.

However, during the summer of 1854, a new epidemic hit London, and this time Snow achieved important practical results when he managed to have the public well in Broad Street closed. Snow had observed that many of his patients had used water from this pump, whereas people in the same area who did not get cholera had not taken water from the well. Here, Snow more or less laid down the principles of the case–control study. However, even though the handle of the pump was removed and the local epidemic came to an end, the health authorities and the local medical establishment were not convinced; Snow’s hypothesis, that an infectious agent in the drinking water was the etiological mechanism, was not easy to accept.

Snow was not defeated, nor was the cholera. New local epidemics occurred. Encouraged by reform-minded people such as William Farr, Snow wanted to test his drinking water hypothesis. The water supply system in London at that time offered the possibility of a most elegant study. Two waterworks, Lambeth & Co. and Southwark & Vauxhall, provided drinking water to large areas of London, and both companies supplied the same districts. The former took its water from farther up the river than the latter, whose water was consequently of much poorer quality and even had a high sodium content. Snow hypothesized that the occurrence of cholera would be much higher in the population receiving the poor-quality drinking water, and he was right: Mortality due to cholera was 14 times higher.

Here, Snow had conducted an elegant cohort study in which the population was grouped according to exposure. Because the two waterworks delivered water in the same districts, one might assume that the only relevant difference between the two groups was the source of water supply, thus eliminating possible confounding factors. Viewed critically, this is not necessarily true; the better drinking water might have been more expensive, introducing a socioeconomic confounder, although the socioeconomic gradient in cholera was allegedly small. Perhaps more important at the time, the poorer drinking water was presumably delivered to the lower floors, a pattern also compatible with the rival hypothesis of miasmas from the ground water. Still, Snow concluded that drinking water was the source, adding that unclean water alone was not sufficient: Only when the specific etiological agent finds its way into the water is the disease disseminated. Snow died in 1858 without having been recognized as the scientist who had clarified the etiology of cholera, but his work had great impact on the subsequent development of the water supply in London and in the rest of the world.

Figure 12. Max von Pettenkofer

On the continent, epidemiological research on cholera followed another path. Max von Pettenkofer (1818–1901) (Figure 12) was in many ways Snow’s extreme opposite, being a member of the German medical establishment. As professor of hygiene, he was asked by the authorities to help organize the fight against cholera when Munich was hit by an epidemic in 1854. His conclusions, although later proved wrong, were recognized by his contemporaries and earned him widespread respect. Pettenkofer was, moreover, a meticulous observer who collected epidemiologically relevant data in much the same way as Snow. He found that houses with many cases, and particularly those with the initial cases, were located at a lower altitude than houses without cholera cases. After some time, the disease also spread to other houses. Uncleanliness was an important factor, but it was also present in houses without cholera. Pettenkofer therefore inferred that cholera was caused by contaminated ground water in which the agent, derived from human stools, was transformed into a gas that rose into the dwellings above; in principle not far from the miasma theory of Antiquity. Pettenkofer even claimed that houses built on rock would never be affected.

Finally, in 1892, Pettenkofer’s fallacy was dramatically demonstrated in a remarkable experiment on himself (Gotfredsen, 1964). After Robert Koch (1843–1910) discovered the cholera bacillus in 1883, many years after Snow’s and Pettenkofer’s initial research, Pettenkofer felt that his ground water theory was losing support. Thus, when an epidemic hit Hamburg in 1892, Pettenkofer obtained a culture of the bacillus and on 7 October drank 1 ml of it. He did not become seriously ill, developing only slight diarrhea a few days later. On 17 October, his assistant repeated the experiment on himself and developed typical rice-water stools. Both had bacilli in their stools for several weeks. Even now, Pettenkofer was not convinced: He was not impressed by his own symptoms and still held that the ground water theory was correct. His opponents attributed his lack of symptoms to the epidemic of 1854, when Pettenkofer had also contracted the disease and presumably acquired lasting immunity.

Nevertheless, Pettenkofer’s efforts to establish adequate sewage systems and to drain the surroundings were instrumental in the fight not only against cholera but also against typhoid and dysentery. His research led to impressive achievements in public health and sanitation, not only in Germany but throughout Europe and the rest of the world. Pettenkofer’s work again demonstrates both the importance and the potential perils of rhetoric in the dissemination of scientific results, and how rhetoric may compensate for a lack of validity in the conclusions. Snow was right and for a long time forgotten, while Pettenkofer was wrong and for a long time celebrated.

Furthermore, the work of both these scientists represents what was later derogatorily called black box epidemiology: Observing effects and attributing them to causal factors without being able to account for the intermediate mechanisms. Snow’s work demonstrates the usefulness of black box epidemiology; an effective fight against cholera was possible without anyone having seen the bacillus. On the other hand, Pettenkofer’s inferences from much the same observations illustrate the potential pitfalls. Knowledge of the pathway between the factor and the outcome strengthens the evidence that an association is causal. Nevertheless, a principle of publishing nothing until all intermediate mechanisms are clarified in every detail is hardly sustainable.

The Public Health Perspective

Cholera was spectacular. The disease was new and had serious consequences, not only medically but also for the community at large in terms of lost productivity: Cholera was a major and evident challenge to the nation. Far less attention was paid to diseases and epidemics in general and to the lack of hygiene.

Figure 13. Edwin Chadwick

In Britain, Edwin Chadwick (1800–90) (Figure 13) started a crusade in 1838 in which he highlighted these problems and their consequences for the community (Natvig, 1964). As secretary to the Poor Law Commission, he produced an influential report on the miserable housing conditions in London’s East End. Consequently, Parliament asked Chadwick to conduct a similar survey throughout the country, which was published in 1842 as the Report on the Sanitary Condition of the Labouring Population of Great Britain.

Statistics on outcomes such as morbidity and mortality, as well as on risk factors such as housing conditions, unemployment, and poverty in general, provided compelling evidence explaining the high morbidity and mortality rates. This statistical evidence also paved the way for special health legislation, the first of its kind, and for the establishment of the first board of health, in London in 1848. Chadwick and William Farr were the chief architects of the British health administration built up during these years.

In the United States, similar pioneering work was conducted by Lemuel Shattuck (1793–1859), who was appointed chairman of a commission reporting on the hygienic conditions in Massachusetts and giving recommendations for future improvements. Shattuck also provided statistical data on the most important causes of death and their distribution by season, place, age, sex, and occupation. The commission stated that the mean age at death might be considerably increased; that thousands of lives lost annually could have been saved and tens of thousands of cases of disease prevented; that these preventable burdens cost enormous amounts of money; that prevention would have been much better than cure; and, most importantly, that means were available to prevent these burdens (Natvig, 1964). Unlike Chadwick, Shattuck did not succeed with his campaign in his lifetime, but later generations have recognized the importance of his work.

Registry-Based Epidemiology

Until the middle of the nineteenth century, the data necessary to clarify etiological issues had been scarce. Initially, the nature of the data needed was not clear; even the school of Hippocrates, which considered disease to be the result of natural causes, did not start systematic data collection on a large scale. In the Middle Ages, the challenges posed by epidemics were overwhelming, precluding any efforts to collect data. The substantial progress in the fight against smallpox was based on a small number of experiments, and the elucidation of the epidemiology of cholera relied on relatively small-scale ad hoc studies. The large hospitals where puerperal fever and pneumonia were studied held enormous amounts of data in their clinical records, as interested doctors who recognized this asset pointed out, but the data were kept in a form that precluded their use in research. As Florence Nightingale pointed out, the data did exist but were inaccessible.

Figure 14. Ove Guldberg Hoegh

Thus, in 1856 a new paradigm was introduced when the first national registry for any disease was established: the National Leprosy Registry of Norway. Prophetically, its initiator, Ove Guldberg Hoegh (1814–62) (Figure 14), stated that it was by means of this registry that the etiology of leprosy would be clarified. At that time, the prevailing opinion among the medical establishment was that the disease was hereditary; it seemed to run in families. Another group claimed that leprosy was caused by harsh living conditions in general, while quite a few considered it a contagious or infectious disease spreading from one person to another. There was thus a pressing need to clarify the etiology of the disease.

Still, other motivations may have been more important for the establishment of the registry. A need was expressed from a humanitarian point of view to provide care for patients. Consequently, one had to know the patients and their whereabouts. Furthermore, there was a need for health surveillance to obtain reliable information on the occurrence of the disease, whether it was increasing or propagating to parts of the country in which it had not been observed previously (Irgens, 1973).

Figure 15. Gerhard Henrik Armauer Hansen

Already in one of his first annual reports, Hoegh presented evidence from the Leprosy Registry of an association between isolation of cases, in terms of admission to leprosy hospitals, and the subsequent occurrence of the disease. On this basis, he inferred that leprosy was an infectious disease. In 1874, when Gerhard Henrik Armauer Hansen (1841–1912) (Figure 15) published his detection of the leprosy bacillus, he first presented an elaborate epidemiological analysis along the same lines as Hoegh’s, confirming the association between isolation and a subsequent fall in incidence. Next, he described the bacillus which, he claimed, was the etiological agent of leprosy.

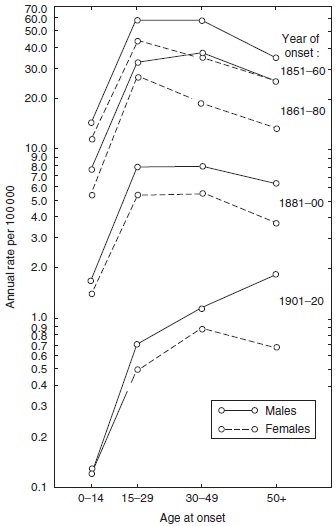

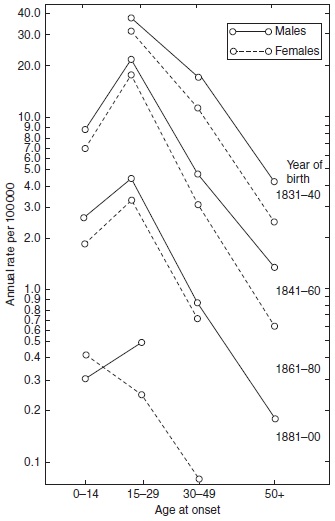

Altogether, 8231 patients were recorded with primary registration data and with follow-up data, e.g., on hospital admissions, until death. In the 1970s, the Leprosy Registry was computerized and utilized in epidemiological studies of the disease (Irgens, 1980), showing that the mean age at onset increased steadily during the period when leprosy was decreasing (Figure 16), an observation useful in leprosy control in countries where a national registry does not exist. A similar pattern is to be expected whenever the incidence of a disease with a long and varying incubation period decreases over an extended period of time.

Figure 16. Age- and sex-specific incidence rates of leprosy by year of onset in Norway 1851–1920. Reproduced from Irgens LM (1980) Leprosy in Norway. An epidemiological study based on a national patient registry. Leprosy Review 51 (supplement 1): 1–130.

Later, new challenges such as tuberculosis and cancer paved the way for similar registries in many other countries. The final step on this registry path was the establishment of medical birth registries covering the total population, irrespective of any disease or medical problem. The first, the Medical Birth Registry of Norway, has kept records since 1967. The medical birth registries were set up in the wake of the thalidomide tragedy of the late 1950s to prevent similar catastrophes in the future. In other countries, permanent case–control studies were set up with similar aims, cooperating in the International Clearinghouse for Birth Defects Surveillance and Research and EUROCAT (European Surveillance of Congenital Anomalies).

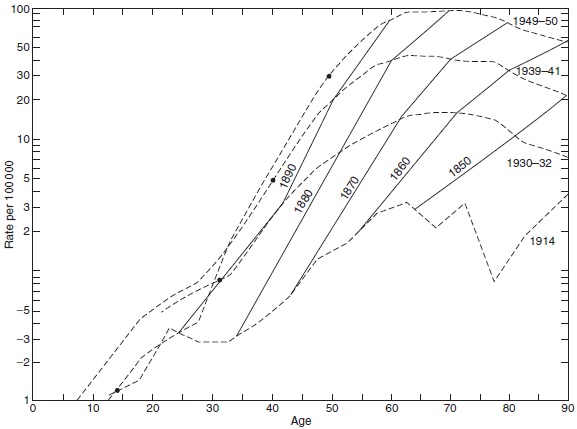

These medical registries, with long time series of observations, provide unique analytical opportunities, particularly for cohort analysis. In 1875, Wilhelm Lexis (1837–1914), in Strasbourg (then part of the German Empire), set up the theoretical statistical framework for following individuals from birth onward. A question often addressed is whether an effect seemingly attributable to age is truly the result of age or rather of year of birth, i.e., a birth cohort effect. For example, in conventional cross-sectional analyses performed in the 1950s, lung cancer mortality peaked around 65 years of age, an observation that led to speculation that most individuals susceptible to lung cancer would have developed the disease by this age and that older individuals would be resistant. However, when analyzed by year of birth, mortality increased continuously with age in every birth cohort (Figure 17). Because the prevalence of smoking was increasing, incidence rose from one cohort to the next, producing artifactual age peaks in the cross-sectional data that represented a cohort phenomenon (Dorn and Cutler, 1959).
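
A toy model shows how a cross-sectional age peak can arise even when every cohort’s rate rises with age. The Python sketch below assumes a purely multiplicative model, rate = age effect × cohort effect, with invented numbers (not Dorn and Cutler’s data) and a cohort effect standing in loosely for rising smoking prevalence. In the calendar-year view, each age group belongs to a different birth cohort, so the older, less exposed cohorts pull down the rates at the highest ages.

```python
# Hypothetical multiplicative model of lung cancer mortality:
#   rate(age, birth cohort) = age effect x birth-cohort effect.
# All numbers are invented for illustration; they are NOT Dorn and Cutler's data.

# Within any one cohort, the rate doubles with each decade of age.
AGE_EFFECT = {40: 1.0, 50: 2.0, 60: 4.0, 70: 8.0, 80: 16.0}

# Cohort effect standing in for lifetime smoking exposure: low in the
# earliest cohorts, rising, then levelling off (years of birth by decade).
COHORT_EFFECT = {1870: 0.5, 1880: 2.0, 1890: 10.0, 1900: 10.0, 1910: 10.0}


def rate(age: int, birth_year: int) -> float:
    """Age-specific rate for one birth cohort under the hypothetical model."""
    return AGE_EFFECT[age] * COHORT_EFFECT[birth_year]


def cross_section(calendar_year: int) -> dict[int, float]:
    """Rates by age in one calendar year (the 'year of death' view).

    Each age group observed in that year belongs to a different birth cohort.
    """
    return {
        age: rate(age, calendar_year - age)
        for age in AGE_EFFECT
        if calendar_year - age in COHORT_EFFECT
    }


def cohort_curve(birth_year: int) -> dict[int, float]:
    """Rates by age followed within one birth cohort (the 'year of birth' view)."""
    return {age: rate(age, birth_year) for age in AGE_EFFECT}


snapshot = cross_section(1950)
print(snapshot)                          # {40: 10.0, 50: 20.0, 60: 40.0, 70: 16.0, 80: 8.0}
print(max(snapshot, key=snapshot.get))   # 60
for birth_year in COHORT_EFFECT:
    print(birth_year, list(cohort_curve(birth_year).values()))
```

In this model the 1950 cross-section peaks at age 60 although rates rise with age in every cohort; the peak disappears as soon as the same rates are read along a birth cohort, which is the contrast drawn in Figure 17.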

Figure 17. Age-specific mortality rate from lung cancer by year of death (broken lines) and year of birth (solid lines). Source: Dorn HF and Cutler SJ (1959) Morbidity from Cancer in the United States. Public Health Monographs No. 56. Washington, DC: US Govt Printing Office.

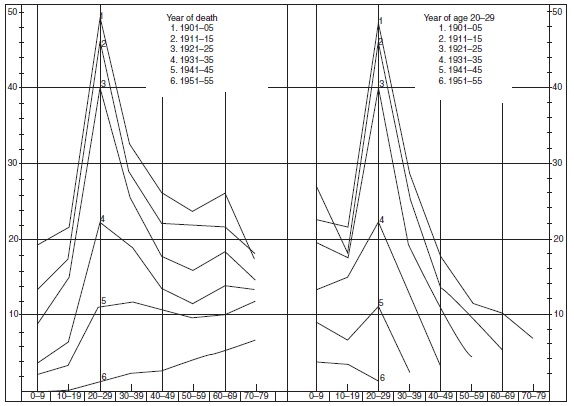

In infectious diseases with long incubation periods, such as tuberculosis and leprosy, a similar cohort phenomenon has been observed. In both diseases, cross-sectional analyses showed that while rates were decreasing, the mean age at onset increased with year of onset. The inference was drawn that when the occurrence is low, the time needed to become infected and to develop the disease would be longer than when the occurrence is high. This may be true in some cases, but it does not account for the increasing age at onset. Analyses by year of birth have shown that the age of peak occurrence did not increase from one birth cohort to the next. Consequently, the pattern observed in the cross-sectional analyses represented a cohort phenomenon (Figures 16, 18, and 19).
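
This reasoning can be made concrete with a small synthetic example. The Python sketch below assumes, purely for illustration, that every birth cohort has the same age pattern of onset (peaking at age 20) while incidence halves from one cohort to the next after 1850; the names and numbers are hypothetical and are not taken from the leprosy or tuberculosis data. Arranged by calendar year, the mean age at onset rises as incidence falls, although the age of peak occurrence within each cohort never changes.

```python
# Hypothetical illustration of a cohort phenomenon in a disease with a long
# incubation period. Every birth cohort has the same age pattern of onset,
# but cohorts born after 1850 have progressively lower incidence.
# Ages are age-group midpoints; all numbers are invented, not registry data.

# Relative occurrence of onset by age within a cohort; peaks at age 20.
ONSET_WEIGHTS = {10: 1, 20: 3, 30: 2, 40: 1}

# Incidence level by year of birth: stable, then halving each decade after 1850.
COHORT_LEVEL = {1820: 16, 1830: 16, 1840: 16, 1850: 16,
                1860: 8, 1870: 4, 1880: 2, 1890: 1}


def cases(age: int, birth_year: int) -> int:
    """Relative number of new cases at a given age in a given birth cohort."""
    return COHORT_LEVEL[birth_year] * ONSET_WEIGHTS[age]


def mean_age_in_year(calendar_year: int) -> float:
    """Cross-sectional view: mean age at onset among cases in one calendar year."""
    by_age = {age: cases(age, calendar_year - age) for age in ONSET_WEIGHTS}
    return sum(age * n for age, n in by_age.items()) / sum(by_age.values())


def peak_age_in_cohort(birth_year: int) -> int:
    """Cohort view: age of peak occurrence within one birth cohort."""
    return max(ONSET_WEIGHTS, key=lambda age: cases(age, birth_year))


for year in (1860, 1870, 1880, 1890):
    print(year, round(mean_age_in_year(year), 1))
# 1860 24.3
# 1870 25.4
# 1880 27.9
# 1890 30.0

print({c: peak_age_in_cohort(c) for c in COHORT_LEVEL})  # every cohort peaks at age 20
```

Once the decline settles into a constant rate of halving, the cross-sectional mean stops rising (30.0 in both 1890 and 1900 in this toy model), so in this sketch the rising mean age reflects the transition to declining incidence across cohorts rather than any change in the age at which individuals fall ill.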

Figure 18. Age- and sex-specific incidence rates of leprosy by year of birth in Norway 1851–1920. Reproduced from Irgens LM (1980) Leprosy in Norway. An epidemiological study based on a national patient registry. Leprosy Review 51 (supplement 1): 1–130. With permission from Leprosy Review.

The detection of such cohort phenomena requires long series of observations, which in practice are available only in registries. The enormous amounts of data in the registries, covering large numbers of individuals over extended periods of time, combined with the analytical power of computer-based information technology, have led epidemiologists to speak of megaepidemiology, and registry-based epidemiology will no doubt play an important role in future epidemiological research.

Causality in the Era of Microbiology

Armauer Hansen’s detection of M. leprae in 1873 was a pioneering achievement, not only for leprosy but for infectious diseases in general. As we have already seen, the concept that some diseases are contagious, spreading from one individual to the next, had existed since Antiquity or before. However, not until 1863, when Casimir Davaine (1812–82) saw the anthrax bacillus and demonstrated that the disease could be reproduced in animals by inoculating blood from infected animals, were the general etiological principles of infectious diseases clarified. Anthrax is an acute disease that was generally considered infectious. Leprosy is a chronic illness, and in the middle of the nineteenth century there was great confusion about its etiology. Even when a microorganism was observed in a disease, it was not necessarily the cause; it might be a consequence of the disease or even a chance finding. Jacob Henle (1809–85) had discussed these problems as early as 1840, and the prerequisites for establishing a microorganism as the cause of a disease later became known as Koch’s postulates (Gotfredsen, 1964). These prerequisites are:

- The microorganism should be found in every case of the disease.

- Experimental reproduction of the disease should be achieved by inoculation of the microorganism taken from an individual suffering from the disease.

- The microorganism should not be found in cases of other diseases.

Paradoxically, Armauer Hansen was not able to meet any of these prerequisites, yet M. leprae was eventually accepted as the cause of leprosy; as early as 1877, a bill aiming to prevent the spread of leprosy by isolating infectious patients was enacted (Irgens, 1973).

Figure 19. Age-specific mortality rates of tuberculosis in Norway by year of death (left panel) and year of age 20–29 (right panel). Source: Central Bureau of Statistics Norway (1961) Trend of Mortality and Causes of Death in Norway 1856–1955. Oslo, Norway: Central Bureau of Statistics Norway.

In their considerations of the etiological role played by microorganisms, Henle and Koch touched on a general problem: whether an association between a potential factor and a medical outcome is causal. In 1965, A. Bradford Hill (1897–1991) published a list of considerations for judging whether an association is causal, now known as Hill’s criteria:

- The association is consistently replicated in different settings and by different methods.

- The strength of the association in terms of relative risk is sufficient.

- The factor causes only one specific effect.

- A dose–response relationship is observed between the factor and the outcome.

- The factor always precedes the outcome.

- The association is biologically plausible.

- The association coheres with existing theory and knowledge.

- The occurrence of the medical outcome can be reduced by manipulating the factor, e.g., in an experiment.

These criteria differ in importance, and the criterion of specificity, stating that a factor causes only one specific effect, is not valid: Cigarette smoking causes a variety of adverse outcomes. Nor is specificity in the opposite direction valid: Lung cancer may be caused by a variety of factors. Such criteria and their shortcomings have been debated since the middle of the nineteenth century, and given the complexity of causal inference, the progress of microbiological research by the end of the nineteenth century was remarkable.

On the other hand, the conditions necessary to undertake this research had existed for almost two centuries, and one may ask why this progress did not occur earlier. The concept of infectious agents, e.g., in the air or spreading from person to person, had been accepted since Antiquity, and around 1675, Anthony van Leeuwenhoek (1632–1723) constructed his own microscopes. In 1683, he even described various types of microorganisms, apparently cocci and rods, which he referred to as small animals. Still, the implications of his observations were not recognized. Again, a lack of the scientific rhetoric needed to convince himself and others of the significance of his findings seems to have been to blame. Furthermore, it has been suggested that the spirit of the eighteenth century, which sought to organize received knowledge into systems (in medicine, systems originating with Hippocrates and Galen) at the expense of new empirical knowledge, was a major obstacle to further progress (Sigerist, 1956).

Two hundred years later, the time was ripe. In 1882, 9 years after Armauer Hansen’s discovery of M. leprae, Koch identified Mycobacterium tuberculosis, cultivated it, and reproduced the disease by inoculating research animals, as he had done for anthrax in 1876; in 1883, he identified the cholera bacillus. Over the next 30 years, Koch’s discoveries were followed by similar achievements in which a large number of pathogenic microorganisms were discovered.

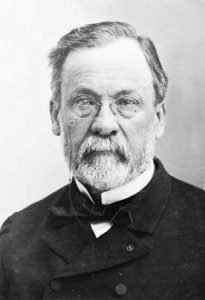

Figure 20. Louis Pasteur

During the same period, the concept of vaccination, introduced a century earlier, was further advanced by Louis Pasteur (1822–95) (Figure 20). Pasteur was also involved in anthrax research, and in 1881 he inoculated anthrax into cattle, some protected by a vaccine and some unprotected; none of the vaccinated animals became infected, whereas all of the unvaccinated animals did, and a large fraction of them died (Gotfredsen, 1964). Thus the concept of vaccination was introduced into the fight against diseases other than smallpox, but the term, which refers to the origin of the first vaccine (vacca = cow), was retained.

Pasteur’s efforts to achieve protection against infectious diseases by inoculating antigens that elicit antibodies against infectious agents were further expanded by himself and several successors. Interestingly, the achievement of Pasteur’s that attracted the most publicity was not a vaccination to protect the community but a vaccine produced for clinical purposes: his vaccine against rabies. Pasteur had succeeded in attenuating the infectious agent of this disease, later identified as a virus, which was inoculated into individuals who had been bitten by rabid dogs. The popular press of the time abounded with reports of how 19 bear hunters, bitten by a rabid wolf, were sent on the Trans-Siberian Express to Paris to be inoculated by Pasteur. Although inoculation should be given as soon as possible after the bite and the journey took 15 days, only three of the 19 (15.8%) died (Gotfredsen, 1964); without treatment, the case fatality rate after such bites ranges from 60 to 90%, and once symptoms appear the disease is almost always fatal.

During the second half of the twentieth century, the importance of infection in the causation of disease seemed to diminish. In part, this may have resulted from the reduced pathogenicity of some previously important infectious agents, e.g., streptococci, which used to cause rheumatic fever as well as kidney and cardiac complications. But waning attention and interest probably also played a part. Thus, Helicobacter pylori was identified as a causative agent in gastric ulcers as late as the early 1980s. Around the end of the twentieth century, infectious diseases again hit the tabloid headlines owing to increased pathogenicity and resistance to antibiotics.

Vitamins and the Web of Causation

In many ways, the history of the study of avitaminoses parallels the history of infectious diseases. Both topics attracted great attention around the turn of the nineteenth century, and both survived a period of negligence to resurrect on top of the list of important medical issues among lay persons aswell as the learned. Furthermore, in the history of epidemiology, both infectious diseases and avitaminoses occurred epidemically, and since their etiologies are essentially different, this caused considerable confusion. Thus, beriberi (avitaminosis B1), pellagra (avitaminosis B2), and scurvy (avitaminosis C), important diseases in the Middle Ages as well as more recently, were all at some time considered to be infectious or attributed to miasma. Also, the etiological avitaminosis research to some extent recalled black box epidemiology: The most important aim was to find measures to prevent the diseases more or less irrespective of the pathogenetic mechanisms involved.

Beriberi (meaning "I cannot", i.e., I cannot work) is a polyneuritic disease that was particularly prevalent in East Asia. In 1886, Christiaan Eijkman (1858–1930) set up a bacteriological laboratory in Batavia (now Jakarta) to find the cause of the disease (Gotfredsen, 1964). After several years of experiments on chickens, he concluded that the disease was caused by the lack of a factor found in cured but not in uncured rice. To find out whether this also applied to humans, an experiment was conducted in 1906 in a mental hospital in Kuala Lumpur, Malaysia, where two wards were given cured and uncured rice, respectively (Fletcher, 1907). The occurrence of beriberi was much lower among patients given cured rice, whose processing preserved this factor. To test the alternative hypothesis that the difference in occurrence was caused by the dwelling, i.e., miasma, the patients in the two wards exchanged wards while retaining their diets, and the difference in occurrence persisted. When the diet was changed from uncured to cured rice, the occurrence changed accordingly.

This experiment illustrates the ethical dilemmas inherent in epidemiology and public health work and would hardly be permitted today. For large numbers of people, however, its potential benefits were a matter of life and death. Later, in 1926, the active substance was identified and named vitamin B1, or thiamin.

Pellagra (Italian pelle agra, rough skin) was described as a nosological entity in the eighteenth century and was considered infectious until Joseph Goldberger (1874–1929) and coworkers clarified its etiology. Pellagra manifested with skin, nervous system, and mental symptoms. The disease occurred most frequently among the economically deprived, particularly in areas where the diet was high in maize (corn). It was common in many parts of Europe, Egypt, Central America, and the southern United States. The largest outbreak occurred in the United States from 1905 through 1915. Goldberger considered the cause of pellagra to be the lack of a factor found in yeast, milk, and fresh meat. In 1937, the factor was identified as niacin (nicotinic acid), now classified as vitamin B3.

Scurvy (Latin scorbutus, possibly derived from a Norse name referring to edema) was the first major disease found to be associated with a nutrient, when the Scottish naval doctor James Lind (1716–94) published A Treatise of the Scurvy in 1753 (Gotfredsen, 1964). He recommended lemon or lime juice, which had proved effective aboard Dutch vessels, as both a preventive and a curative measure. A daily dose became compulsory in the Royal Navy from 1795 and in the merchant navy from 1844, after which scurvy disappeared. The disease had, however, been recognized much earlier; it was a curse during the Crusades. In wartime, it caused high mortality in armies, navies, and besieged cities. Most importantly, scurvy was the disease of the sailing ships of the great voyages of exploration, and it was still of some importance as recently as World War II. Great progress in understanding its etiology was made by Axel Holst (1860–1931) and Theodor Frølich (1870–1947), who, based on a series of experiments, concluded in 1907 that scurvy was an avitaminosis (Natvig, 1964; Norum and Grav, 2002). The active agent, vitamin C, or ascorbic acid, was isolated around 1930.

The clarification of the etiology of rachitis (rickets) illustrates the importance of interacting risk factors in the web of causation. The disease had been described as a nosological entity around 1650 and was later attributed to both genetic and infectious factors. However, its prevention by cod liver oil represented the only firm etiological evidence. In the 1920s, a component derived from cod liver oil was identified and named vitamin D, and at the same time an antirachitic effect of ultraviolet light was demonstrated. Only in the early 1930s was the antirachitic factor, calciferol, isolated and the role of ultraviolet radiation established. In the past, as in the developing world today, rachitis was and is a disease caused by vitamin D deficiency. In the developed world today, with adequate nutrition, rachitis can still be observed, but here it represents a genetic metabolic disorder. A similar shift can be seen in tuberculosis. In the past, as in the developing world today, when virtually the whole population was infected, whether tuberculosis developed depended on genetic susceptibility or on other cofactors such as protein deficiency. In the developed world today, tuberculosis depends on the risk of infection. These examples shed light on the concept of necessary and sufficient etiological factors (infection, for instance, is necessary for tuberculosis but not sufficient on its own) and illustrate how the etiological web of a disease can vary according to time and place.
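One common way to formalize necessary and sufficient causes is the sufficient-component cause ("causal pie") model described by Rothman and Greenland (1998). The Python sketch below is a deliberately simplified, hypothetical encoding of the tuberculosis example: the two sufficient causes and their component names are assumptions for illustration, not an established causal model of the disease. A component shared by every sufficient cause is necessary, and which component separates cases from non-cases depends on which components are already common in the population.

```python
# Hypothetical sufficient causes: each is a set of components that together
# produce disease (a simplification for illustration only).
SUFFICIENT_CAUSES = (
    frozenset({"infection", "genetic_susceptibility"}),
    frozenset({"infection", "protein_deficiency"}),
)


def develops_disease(exposures, causes=SUFFICIENT_CAUSES):
    """Disease occurs if all components of at least one sufficient cause are present."""
    have = set(exposures)
    return any(cause <= have for cause in causes)


def necessary_components(causes=SUFFICIENT_CAUSES):
    """Components shared by every sufficient cause are necessary causes."""
    return frozenset.intersection(*causes)


# Past setting: everyone infected, so cofactors decide who falls ill.
past = [{"infection", "genetic_susceptibility"}, {"infection"}]
# Present setting: susceptibility held constant, so infection decides.
present = [{"genetic_susceptibility", "infection"}, {"genetic_susceptibility"}]

print(necessary_components())                  # frozenset({'infection'})
print([develops_disease(p) for p in past])     # [True, False]
print([develops_disease(p) for p in present])  # [True, False]
```

In both settings the same causal structure holds; only the distribution of the other components changes, which is why the factor that appears to "cause" the disease differs between them.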

Vitamins and their relevance to human disease were more or less forgotten once the etiology of the avitaminoses had been clarified and preventive measures taken. However, the observation in case–control studies in the early 1990s that folic acid use very early in pregnancy significantly reduced the occurrence of congenital neural tube defects contributed to a change of attitude. Later, it appeared that the occurrence of birth defects in general might also be reduced. Subsequent research has focused on folic acid and its relationship with homocysteine, a risk factor for cardiovascular disease. An inverse association between folic acid intake and serum homocysteine has suggested a protective effect of folic acid. A protective effect against cancer has also been suggested, but this is still far from clarified.

Sudden Infant Death Syndrome and Evidence-Based Recommendations

Sudden infant death syndrome (SIDS) brings us back to the beginning, to the Bible, which gives an account of an infant smothered to death during the night by its mother. Later sources also describe this condition, in which an apparently healthy infant dies suddenly. In the middle of the nineteenth century, the sociologist Eilert Sundt (1817–75) conducted an epidemiological study of the occurrence of SIDS in Norway.

Interest in the condition grew when, in the 1970s, its occurrence seemed to be increasing in many countries. However, this trend in the mortality statistics was questioned, particularly by pathologists who claimed that it was caused by increasing interest and awareness, whereby cases previously diagnosed as, for example, pneumonia were now diagnosed as SIDS, a so-called transfer of diagnosis. Early epidemiological studies had established that SIDS occurred after the first week of life and throughout the first year, with a highly conspicuous peak around 3 months. A mere transfer of diagnosis would leave total mortality at these ages unchanged. When time trend studies of total mortality in a window around 3 months of age showed the same secular trend as the alleged SIDS trend, transfer of diagnosis was therefore ruled out, and there was general agreement that an epidemic of SIDS was under way.

This epidemic occurred in most countries where reliable mortality statistics were available. In some countries, all infant deaths that had occurred over an extended period were reviewed on the basis of the death certificates, and the increase in SIDS risk turned out to have been even steeper than previously thought. Thus, in Norway, the SIDS rate increased threefold, from 1 per 1000 in 1969 to 3 per 1000 in 1989. Consequently, national research councils in many countries earmarked funds for SIDS research, and comprehensive efforts were made to resolve the so-called SIDS enigma. Influential national parent organizations were also set up, and international research associations were established. The rising SIDS rates even caused general public awareness and concern.

In initial case–control studies and registry-based cohort studies, risk factors for SIDS such as low maternal age and high parity were identified. Furthermore, there were social gradients, with strong effects of marital status and maternal education. However, none of these risk factors could explain the secular increase. Although genetic factors could not account for the increase either, the risk of recurrence among siblings was a source of great concern. Early case–control studies had reported relative recurrence risks of more than 10, but registry-based cohort studies found a relative risk of around 5, a relief for mothers who had experienced a SIDS loss. The difference was most likely attributable to selection bias in the case–control studies: in a non-population-based case–control study, a mother with two losses would be more likely to participate than a mother with only one loss.
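The selection-bias argument can be made concrete with a simplified calculation. All inputs below are hypothetical (a true relative recurrence risk of 5 and assumed participation probabilities); none are taken from the studies referred to in the text. The sketch assumes that recurrence risk is estimated as the proportion of participating bereaved mothers whose next infant also died, divided by a population baseline risk; the function `observed_recurrence_rr` is illustrative.

```python
def observed_recurrence_rr(r1, r0, p_two_losses, p_one_loss):
    """Relative recurrence risk seen in a non-population-based series.

    r1: true risk of SIDS in a subsequent sibling (as in a population cohort)
    r0: baseline risk of SIDS in infants of mothers without a previous loss
    p_two_losses: probability that a mother with two losses participates
    p_one_loss: probability that a mother with one loss participates
    """
    recurrent = r1 * p_two_losses     # participating mothers with two losses
    single = (1 - r1) * p_one_loss    # participating mothers with one loss
    observed_r1 = recurrent / (recurrent + single)
    return observed_r1 / r0


# Hypothetical risks giving a true relative recurrence risk of 5.
r0, r1 = 0.002, 0.010
print(f"True relative risk: {r1 / r0:.1f}")
print(f"Equal participation: {observed_recurrence_rr(r1, r0, 0.5, 0.5):.1f}")
print(f"Selective participation: {observed_recurrence_rr(r1, r0, 0.9, 0.4):.1f}")
```

With equal participation the estimate recovers the true value of 5; when mothers with two losses are assumed to be more than twice as likely to take part, the estimate exceeds 10, in line with the pattern described above.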

Eventually, infant care practices became the focus of large, comprehensive case–control studies. In the late 1980s, the first evidence, from Germany, was published indicating that the prone (face-down) sleeping position was harmful, with a relative risk of around 5 compared with the supine position. This finding was later confirmed by studies in Australia, New Zealand, Scandinavia, Britain, and elsewhere on the European continent, which found far higher relative risks. The side sleeping position was also found to be harmful, as were maternal cigarette smoking, co-sleeping, non-breastfeeding, and overheating. The use of a pacifier was found to be protective.
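Case–control studies estimate an odds ratio, which approximates the relative risk when the outcome is rare, as SIDS is. A minimal Python sketch, assuming a hypothetical 2×2 table (the counts are invented for illustration and chosen to give an odds ratio of 5) and using Woolf's log-based confidence interval, one standard choice that is not necessarily the method used in the studies cited; `odds_ratio_ci` is an illustrative name:

```python
import math


def odds_ratio_ci(a, b, c, d, z=1.96):
    """Odds ratio with a Woolf (log-based) confidence interval for a 2x2 table.

    a: exposed cases      b: unexposed cases
    c: exposed controls   d: unexposed controls
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("all cells must be positive for the Woolf interval")
    or_ = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # SE of ln(OR)
    lower = math.exp(math.log(or_) - z * se_log)
    upper = math.exp(math.log(or_) + z * se_log)
    return or_, lower, upper


# Hypothetical counts: prone (exposed) versus supine (unexposed) sleeping.
or_, lo, hi = odds_ratio_ci(a=100, b=50, c=40, d=100)
print(f"OR = {or_:.1f} (95% CI {lo:.1f} to {hi:.1f})")
```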

Disseminating the information that prone sleeping was a serious risk factor had a dramatic effect. In Norway, this information was spread by the mass media in January 1990, and the SIDS rate dropped from 2.0 per 1000 in 1988 to 1.1 in 1990. A retrospective study showed that the prevalence of the prone sleeping position, after increasing continuously from 7.4% in 1970, fell from 49.1% in 1989 to 26.8% in 1990 (Irgens et al., 1995). The example again illustrates how important it is to provide scientifically convincing evidence, which is pivotal to bringing the results of epidemiological research into practice.
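The scale of the Norwegian changes can be expressed as relative changes, using only the figures quoted in the text. The two series cover slightly different years (1988 to 1990 for the SIDS rate, 1989 to 1990 for prone sleeping), so setting them side by side is a rough juxtaposition rather than an estimate of effect; the helper `relative_change` is hypothetical:

```python
def relative_change(before: float, after: float) -> float:
    """Relative change from before to after (negative values are declines)."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (after - before) / before


# Figures quoted in the text for Norway.
sids_change = relative_change(2.0, 1.1)     # SIDS per 1000, 1988 -> 1990
prone_change = relative_change(49.1, 26.8)  # % sleeping prone, 1989 -> 1990
print(f"SIDS rate: {sids_change:.0%}")       # -45%
print(f"Prone sleeping: {prone_change:.0%}") # -45%
```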

The SIDS experience raises important questions about the reliability of recommendations given to the public on the basis of epidemiological studies. Pediatricians had recommended the prone sleeping position since the 1960s to prevent scoliosis as well as cranial malformations. Even though a British case–control study early in this period had observed an increased relative risk, no action was taken: again an example of the necessity of convincing scientific evidence.

Future Directions

Although epidemiology, and observational studies in particular, have at times been criticized for producing misleading conclusions, the search for knowledge of the determinants of disease as a basis for effective prevention will no doubt continue, giving epidemiology an important future role. New challenges include life-course epidemiology, addressing major causes of adult disease and death, as well as the study of interactions between environmental and genetic factors. Further progress will require the public to understand the necessity of providing data, often based on the suffering of the individual, for the benefit of the community and future generations. This ethical principle underlies all epidemiological research and is of utmost importance for obtaining the data needed in the search for causes in medicine.

Bibliography:

- Aggebo A (1964) Hvorfor Altid den Hippocrates? Aarhus, Denmark: Universitetsforlaget i Aarhus.

- Carpenter RG, Irgens LM, Blair P, et al. (2004) Sudden unexplained infant death in 20 regions in Europe: Case–control study. Lancet 363: 185–191.

- Dorn HF and Cutler SJ (1959) Morbidity from cancer in the United States. Public Health Monographs No. 56. Washington, DC: US Govt Printing Office.

- Eyler JM (1980) The conceptual origins of William Farr’s epidemiology: Numerical methods and social thought in the 1830s. In: Lilienfeld AM (ed.) Times, Places and Persons, pp. 1–21. Baltimore, MD: Johns Hopkins University Press.

- Fletcher W (1907) Rice and beri-beri: Preliminary report of an experiment conducted at the Kuala Lumpur Lunatic Asylum. Lancet 1: 1776–1779.

- Gotfredsen E (1964) Medicinens Historie. Copenhagen, Denmark: Nyt nordisk forlag Arnold Busck.

- Hauge D (1973) Fortolkning til Samuelsbokene (In Bibelverket). Oslo.

- Henderson DA (1980) The history of smallpox eradication. In: Lilienfeld AM (ed.) Times, Places and Persons, pp. 99–114. Baltimore, MD: Johns Hopkins University Press.

- Hilts VL (1980) Epidemiology and the statistical movement. In: Lilienfeld AM (ed.) Times, Places and Persons, pp. 43–55. Baltimore, MD: Johns Hopkins University Press.

- Irgens LM (1973) Epidemiology of leprosy in Norway. An interplay of research and public health work. International Journal of Leprosy 41: 189–198.

- Irgens LM (1980) Leprosy in Norway. An epidemiological study based on a national patient registry. Leprosy Review 51 (supplement 1): 1–130.

- Irgens LM, Markestad T, Baste V, Schreuder P, Skjaerven R, and Øyen N (1995) Sleeping position and SIDS in Norway 1967–91. Archives of Disease in Childhood 72: 478–482.

- Last JM (2001) A Dictionary of Epidemiology. Oxford, UK: Oxford University Press.

- Lilienfeld DE and Lilienfeld AM (1980) The French influence on the development of epidemiology. In: Lilienfeld AM (ed.) Times, Places and Persons, pp. 28–38. Baltimore, MD: Johns Hopkins University Press.

- Loudon I (2000) The Tragedy of Childbed Fever. Oxford, UK: Oxford University Press.

- Lund PJ (2006) Semmelweis – en varsler. Tidsskrift for Den norske Lægeforening 126: 1776–1779.

- Mackenbach J (2005) Kos, Dresden, Utopia . . . A journey through idealism past and present in public health. European Journal of Epidemiology 20: 817–826.

- Michelsen LM (1972) Fortolkning til 1. Mosebok (In Bibelverket). Oslo: Bibelverket.

- Natvig H (1964) Lærebok i Hygiene. Oslo: Liv og helses forlag.

- Norum KR and Grav HJ (2002) Axel Holst og Theodor Frølich. Pionerer i bekjempelsen av skjørbuk. Tidsskrift for Den norske Lægeforening 122: 1686–1687.

- Phillips B (1838) Mortality of Amputation. Journal of the Statistical Society of London 1: 103–105.

- Rothman K and Greenland S (1998) Modern Epidemiology. Philadelphia, PA: Lippincott-Raven Publishers.

- Sigerist HE (1956) Landmarks in the History of Hygiene. London: Oxford University Press.

- Statistics Norway (1961) Dødeligheten og dens årsaker i Norge 1856–1955. Trend of mortality and causes of death in Norway 1856–1955. Statistisk Sentralbyrå, Oslo, No. 10, 132.

- Warkany J (1971) Congenital Malformations. Chicago, IL: Year Book Medical Publishers Inc.