Outline

Economic evaluations in the form of decision-analytic models draw on many different types of secondary evidence in addition to costs and effects. The additional types of information used include natural history, epidemiology, quality of life weights (utilities), adverse events, resource use, and activity data. This information is drawn from a variety of sources, which can be research-based or nonresearch-based.

Methods for identifying and reviewing evidence on randomized controlled trials (RCTs) of clinical effectiveness are well established. Some methods, many of which have not been validated, have been developed for other types of research-based information. Little guidance is available on identifying and assessing nonresearch based sources of information. On the whole, searching and reviewing methods have been developed in the context of systematic reviews and do not necessarily take into account factors specific to the task of developing decision-analytic models. This article considers how evidence is used to inform decision-analytic models and the implications of this for the methods by which evidence is identified and assessed.

The article considers the following:

- The types of information used to inform decision-analytic models for cost-effectiveness analysis.

- How to search for and to locate these types of evidence.

- How to select and to review evidence to inform a model.

- Factors specific to searching and reviewing in the context of decision-analytic modelling.

Introduction

Decision-analytic cost-effectiveness models aim to inform resource allocation decisions in health care. Evidence is assessed within a framework that reflects the complexity of the decision problem. A broad range of evidence, in addition to evidence on costs and effects, is required to support this approach. Important additional types of information include epidemiology, quality of life weights, natural history, and resource use. This information is drawn from different types of study design including experimental and observational research and from nonresearch based sources including routinely collected data, administrative databases, and experts.

A range of information retrieval methods is required to identify the diversity of information used to inform a decision-analytic model. Methods for identifying and reviewing evidence of clinical effectiveness from RCTs have been developed by organizations such as the Cochrane Collaboration. To a lesser degree, methods for the identification and review of other study designs, such as observational studies, have been developed, although these have generally not been validated and are less well established. Very little guidance exists to support the systematic identification and assessment of nonresearch-based sources, including, for example, routinely collected data such as prescribing rates. Such sources can be difficult to locate and to access, and navigating and interrogating their nonstandard, often complex formats can be challenging.

On the whole, searching and reviewing methods have been developed in the context of systematic reviews of clinical effectiveness. As such they have been designed to identify and to assess experimental evidence with high internal validity in order to address single, focussed questions on the effects of treatment. Systematic review search methods require extensive searching in an attempt to identify all studies that match the characteristics of the focussed review question (typically defined by the populations, interventions, comparators, and outcomes of interest). The quality of a search is defined according to its sensitivity (that is, the extent to which it has identified all studies that match the review question). The systematic review search approach has become the benchmark approach to searching in all types of health technology assessment (HTA), including decision-analytic modelling, and the concept of sensitivity has become the defining characteristic of a high quality search. Such methods, however, do not necessarily take into account factors specific to the task of developing decision-analytic models such as addressing issues of complexity, drawing on a wide range of different types of evidence and assessing effectiveness in the absence of direct or good quality evidence. This article explores how evidence is used in models and the implications of this for the methods by which evidence is identified and assessed.

Types Of Information And Forms Of Evidence: The Classification Of Evidence

Types Of Information

The need for a range of information to inform model parameter estimates is well understood. Clinical effect size, baseline risk of clinical events, costs, resource use, and quality of life weights have been identified as the five most common data elements required to populate decision-analytic models.

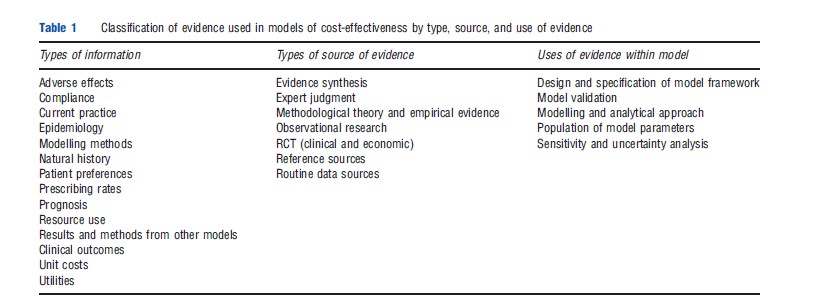

Information is also used to support a number of modelling activities in addition to parameter estimation. An analysis of the sources of evidence cited in the reporting of models identified a range of different types of information, drawn from different information sources and used for a variety of modelling activities (see Table 1). These included, in addition to model parameters, the definition of the model structure, the overall design and scope of the model, and various analytical activities and modelling methods.

The types of information used in models can be grouped into five broad categories. The first category relates to the condition or disease area of interest and includes information on natural history, epidemiology, and prognosis. Typical uses of such information include the specification of the model structure through the definition of the disease pathways and of health states within the pathway. Information is also used to provide estimates of baseline population characteristics and baseline risks of clinical events. Some evidence on costs, resource use, and utilities will also be included here.

Further information is required to specify the available treatment options and the management of the condition or disease. A range of information, including clinical practice guidelines and policy documents, expert advice, prescribing rates, and other activity data, can be used to define the management options, including relevant comparators, treatment strategies and procedures, and the options available at various stages of the disease pathway.

Category three relates to the costs and effects of the clinical interventions of interest. The types of information required include effectiveness evidence, comprising clinical outcomes, adverse effects, and quality of life weights. Adverse effects and quality of life weights often constitute additional, separate requirements where trials provide no or insufficient evidence on these outcomes. As such, searches focussing on these types of evidence might be undertaken in addition to searches for RCT evidence. Other types include information on the cost and resource use implications of delivering the interventions.

Some models might take into account factors that affect the uptake of treatment and that might affect costs or effects outside the controlled environment of an experimental setting. Potentially relevant information includes patient preferences, adherence, or acceptance of treatment as a function of the mode of delivery of an intervention, such as home-based versus hospital-based delivery, or oral versus infusional delivery.

The final category provides analytical rather than clinically related information. This is used to support choices relating to the modelling approach and analytical methods. It can include methodological standards and guidelines, empirical and theoretical methodological research, and the modelling approaches used in existing economic analyses.

Formats Of Evidence

The range of information used in decision-analytic models is drawn from a number of different forms of evidence. Examples are listed in Table 1. Although it is difficult to devise a definitive classification of the different evidence formats, it is possible to identify several study designs and formats which can be categorized broadly as research-based and nonresearch based evidence.

Research-based sources take the form of a number of different study designs, reflecting the different types of information used in models. These include evidence syntheses (meta-analyses, systematic reviews, and cost-effectiveness models); RCTs; primary economic evaluations; observational study designs such as cross-sectional surveys and longitudinal cohort studies; and theoretical and empirically based methodological studies.

The use of nonresearch based sources reflects the need for models to address real world issues and to take account of the context of the decision. Such sources include expert opinion, both published and in the form of expert advice, routinely collected data and ‘reference sources.’ Routine data sources cover, for example, national disease registers (although some disease registers will be classed as observational research), life tables, and health service activity data. The category of sources referred to as ‘reference sources’ describes standard sources often providing generic information, including drug formularies or disease classifications, or sources that have some inferred authority due to consensus on their reliability or relevance to the context of the decision. Examples of the latter might include policy guidance and practice guidelines.

To some extent it is possible to associate the different types of information with the different forms of evidence. For example, it is well established that evidence of clinical effectiveness should ideally be taken from RCTs. Members of the Cochrane and Campbell Economics Methods Group (C-CEMG) have devised a useful hierarchy of evidence for the most important model data inputs, although it should be borne in mind that this does not cover all the types of information used to inform a model.

For those outcomes where there might be insufficient trial evidence, quality of life weights might be taken from cross-sectional observational studies, and evidence of adverse effects can be found in observational cohort studies and post-marketing surveillance data. Epidemiological information can be drawn from longitudinal studies, including long-term routinely collected data. Observational studies can provide information on factors affecting the uptake of the intervention. Information on uptake might also be found in qualitative studies. Such evidence is useful where an issue is considered important enough to warrant discussion but quantitative data suitable for incorporation in a model are not available.

Evidence to inform the specification and estimation of costs and resource use can be difficult to categorize. For the specification of a cost analysis (i.e., the identification and definition of relevant cost and resource groups), observational costing studies, previous economic analyses, expert opinion, and documentation of how a condition is managed, such as clinical guidelines, are useful. For the data with which to populate a model, routinely collected data such as prescribing costs and reference sources such as drug formularies and widely accepted compilations of unit costs are preferable. Where an overall estimate of the costs and resources associated with being in a particular health state is required (e.g., the cost of managing a stroke), it is necessary to use an existing cost analysis, for example, from a previous economic analysis. It is important, however, that consideration is given to the reliability of such a summary estimate.
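Where a health-state cost has to be built up from its components rather than taken as a summary estimate, the arithmetic is a sum of resource quantities multiplied by unit costs drawn from reference sources. The sketch below assumes hypothetical resource items, quantities, and unit costs (none are taken from a real formulary or cost compilation); it shows how a specification of resource groups and a set of unit costs combine, and it fails loudly when a unit cost is missing rather than silently treating it as zero.

```python
# Hypothetical resource-use profile for one health state (per model cycle).
# Item names, quantities, and unit costs are illustrative placeholders only.
resource_use = {
    "gp_visit": 4,
    "outpatient_visit": 1,
    "drug_x_pack": 3,
}
unit_costs = {
    "gp_visit": 30.0,
    "outpatient_visit": 120.0,
    "drug_x_pack": 15.5,
}


def health_state_cost(resource_use: dict, unit_costs: dict) -> float:
    """Sum quantity x unit cost over every resource item in the health state."""
    missing = set(resource_use) - set(unit_costs)
    if missing:
        # A missing unit cost is an evidence gap, not something to default to zero.
        raise KeyError(f"No unit cost for: {sorted(missing)}")
    return sum(qty * unit_costs[item] for item, qty in resource_use.items())


print(health_state_cost(resource_use, unit_costs))  # 286.5
```

Keeping the specification (which items) separate from the unit costs (what each costs) mirrors the distinction drawn above between evidence used to specify a cost analysis and evidence used to populate it.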

The type of evidence used to specify the management of the condition of interest is also difficult to categorize. The definition of disease management and the specification of current practice are complex information needs requiring information on, for example, treatment options, disease management pathways, and clinical decision-making rules or policies. These needs are unlikely to be satisfied by a single source of information, particularly when a model is required to reflect variations in disease management between countries. To represent current practice it is necessary to draw together a range of different types of information to form an overall picture of how a disease or condition is managed. Sources of this information might include, but are not restricted to, treatment and practice guidelines, activity data, and expert opinion.

In addition to the different types of evidence used to inform a model, it is also important to consider the scope of evidence within each type that will be relevant to the model. For example, the scope of relevant clinical effectiveness evidence is unlikely to be restricted to evidence on the intervention(s) of interest. In the absence of direct, head-to-head trials, evidence relating to the comparator(s) of interest should also be sought so that indirect comparisons can be undertaken. The scope is extended further where mixed treatment comparisons are undertaken; here, a network of evidence, including trials of treatments that are not formal comparators, is required. The scope of relevant evidence is also determined by the breadth of the framework within which a decision problem is analyzed. Although some models assess the cost-effectiveness of an intervention or interventions at a specific point in the disease pathway, this is done in the context of the whole disease pathway. The effectiveness and cost-effectiveness of an intervention may therefore need to be considered in terms of its impact on the whole pathway. For example, a diagnostic intervention will be assessed not just on its diagnostic accuracy but also on the extent to which it affects the longer term management of a condition and, ultimately, the clinical endpoints relevant to the condition, including survival and quality of life. As such, evidence on, for example, treatment effects, quality of life weights, costs, and resource use is required not just for the intervention of interest at the point of the decision but for all important management options and health states at all stages of the disease pathway. This is an important consideration in defining the scope and range of searches to be undertaken.
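Why trials of the comparator matter can be seen in the simplest form of indirect comparison. The sketch below uses the Bucher adjusted indirect comparison, a standard method named here for illustration rather than one the article prescribes: given trials of A versus B and of C versus B, the log odds ratio of A versus C is estimated as the difference of the two log odds ratios, and their variances add. The input odds ratios and standard errors are hypothetical.

```python
import math


def bucher_indirect(log_or_ab: float, se_ab: float,
                    log_or_cb: float, se_cb: float) -> tuple[float, float]:
    """Adjusted indirect comparison of A vs C through common comparator B.

    Returns the log odds ratio of A vs C and its standard error.
    """
    log_or_ac = log_or_ab - log_or_cb
    # Variances of the two independent trial estimates add.
    se_ac = math.sqrt(se_ab ** 2 + se_cb ** 2)
    return log_or_ac, se_ac


# Hypothetical inputs: OR(A vs B) = 0.5 (SE 0.20), OR(C vs B) = 0.8 (SE 0.15).
log_or_ac, se_ac = bucher_indirect(math.log(0.5), 0.20, math.log(0.8), 0.15)
low, high = (math.exp(log_or_ac + z * se_ac) for z in (-1.96, 1.96))
print(f"OR(A vs C) = {math.exp(log_or_ac):.3f}, 95% CI {low:.3f} to {high:.3f}")
```

The indirect estimate (standard error 0.25 here) is less precise than either direct estimate, which is one reason the wider network of evidence described above is sought.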

Searching For And Locating Evidence

Models have multiple information needs that cannot be satisfied by a single search query. A series of focussed, iterative search activities should underpin the development of a model. To identify the diversity of evidence required, it is necessary to access a range of different types of information resources and to adopt a number of different information retrieval techniques.

Search methods for the retrieval of RCT evidence of clinical effectiveness are well documented by organizations such as the Cochrane Collaboration and are not covered in depth here. Bibliographic databases remain important sources, particularly for the identification of research-based evidence. However, given the diversity of information required, the totality of the evidence base used to inform any single model will be fragmented. In particular, information from nonresearch-based sources is scattered, and the location and format of these sources can make retrieval difficult.

General biomedical bibliographic databases such as Medline and Embase provide access to a substantial volume of published information and can be interrogated using subject-specific keyword search techniques. Search filters, which are predefined combinations of keywords designed to identify specific study designs or types of information, are useful in identifying the individual types of information required for a model. For example, a number of filters exist for the retrieval of RCTs and cost information. Filters exist for many types of information; most have been designed pragmatically and few have been validated. Nonetheless, filters are widely used to improve the relevance of search strategies. The Information Specialists’ Sub-Group of the Inter Technology Assessment Consortium, the UK academic network undertaking HTA for the National Institute for Health and Clinical Excellence (NICE), has developed an extensive resource of critically appraised search filters. For non-RCT evidence, the Campbell and Cochrane Economic Methods Work Group provides advice on searching for economic evaluations and for quality of life weights. Research on the retrieval of evidence on adverse events is currently being undertaken at the University of York in the UK. These sources provide advice both on searching general biomedical databases and on specialist resources particular to specific topic areas.

Some useful specialist databases and resources exist for the retrieval of research-based non-RCT evidence. The Centre for Reviews and Dissemination at the University of York provides access to the National Health Service Economic Evaluation Database (NHS EED) and the HTA database, the latter including the register of projects and publications of the member organizations of the International Network of Agencies for Health Technology Assessment (INAHTA). The Tufts Cost-Effectiveness Analysis Registry extracts utility data from systematically identified cost-utility analyses. The Health Technology Assessment international (HTAi) Vortal is an extensive searchable repository of resources relating to the field of HTA.

Routine data and reference sources are disparate and cannot easily be brought together as a single reliable resource that can be interrogated using a uniform search approach. Compilations of such resources have not been developed to any great degree, although some of the sources mentioned above provide partial coverage. Part of the value of this type of information is its relevance to the decision-making process in terms of geographical context or relevance to a specific decision-making authority. For example, the British National Formulary, the Office for National Statistics, and NHS Reference Costs are three highly relevant sources for informing model parameter estimates in England and Wales, but they do not carry the same authority in other decision-making jurisdictions. It would, therefore, be difficult to develop an international, generic, and comprehensive resource. Organizations such as the World Health Organization and the Organization for Economic Cooperation and Development can provide access to useful country-specific information. International bodies such as HTAi and the International Society for Pharmacoeconomics and Outcomes Research (ISPOR) also provide useful starting points. At a national or more local level, harnessing knowledge and skills to provide compilations of information sources relevant to specific, local decision-making is an important area for development.

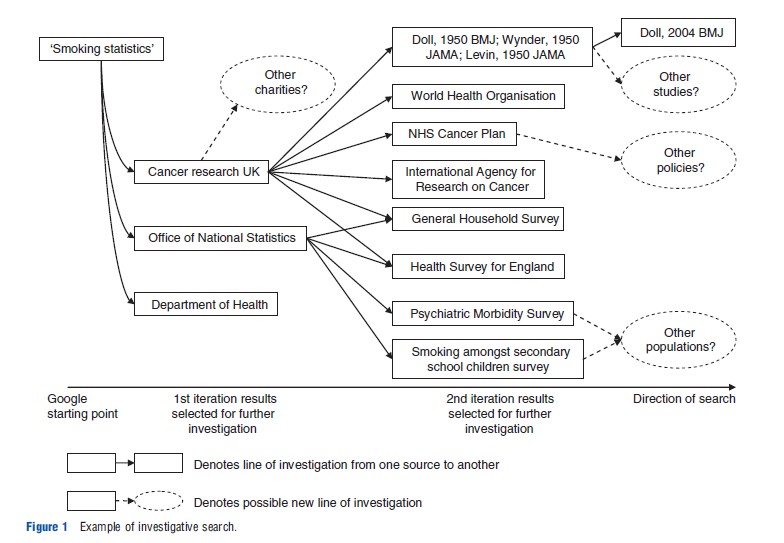

In the absence of such resources, the systematic identification of routine and reference sources of information cannot rely on the traditional keyword searching approach commonly associated with systematic review search methods. An alternative approach is to follow systematic lines of enquiry. This investigative search approach is similar in principle to citation pearl growing and snowballing, which are used as supplementary search techniques in systematic reviews. Investigative searching does not attempt to identify everything at once using a single, catchall search query. Rather, the objective is to retrieve information in a systematic, auditable, and piecemeal fashion. This is done by identifying one or more relevant starting points and using these to identify relevant leads, which are followed up systematically and iteratively until sufficient information has been identified. A search starting point could be a known publication, advice from an expert, or a highly focussed exploratory search using a bibliographic database (e.g., Medline) or an internet search engine (e.g., Google). Repeatedly following up leads, known as proximal cues, in the form of new relevant keywords, references, and names of authors and organizations builds a network of sources for further investigation. An illustration of a brief investigative search is given in Figure 1.
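The piecemeal, auditable character of investigative searching can be sketched as a breadth-first traversal of leads. In the sketch below, the network of leads, the naming scheme (`ref:`, `author:`, `kw:`, `org:`), and the relevance rule are all hypothetical stand-ins for judgments a searcher makes by hand; the point is that every step records which source was examined and which new leads it yielded, so that the search can be reported and repeated.

```python
from collections import deque

# Hypothetical network of leads: each source points to the proximal cues
# (references, authors, keywords, organizations) it suggested.
LEADS = {
    "known_paper": ["ref:registry_report", "author:smith", "kw:disease_registry"],
    "ref:registry_report": ["org:national_registry"],
    "author:smith": ["ref:registry_report", "ref:cohort_study"],
    "kw:disease_registry": [],
    "org:national_registry": [],
    "ref:cohort_study": [],
}


def investigative_search(start_points, follow, is_relevant, max_steps=50):
    """Follow leads iteratively from the starting points, keeping an audit log."""
    queue = deque(start_points)
    seen = set(start_points)
    audit_log, relevant = [], []
    steps = 0
    while queue and steps < max_steps:  # max_steps is a crude stopping rule
        source = queue.popleft()
        steps += 1
        new_leads = []
        for lead in follow(source):
            if lead not in seen:  # never follow the same lead twice
                seen.add(lead)
                new_leads.append(lead)
        audit_log.append((source, new_leads))
        if is_relevant(source):
            relevant.append(source)
        queue.extend(new_leads)
    return relevant, audit_log


relevant, log = investigative_search(
    ["known_paper"],
    follow=lambda s: LEADS.get(s, []),
    is_relevant=lambda s: s.startswith(("ref:", "org:")),
)
print(relevant)
for source, leads in log:
    print(source, "->", leads)
```

In practice the `follow` step is a human activity (checking a reference list, searching an author's name); only the bookkeeping is mechanical.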

In terms of obtaining information from experts, the potential for bias is high and it is important to demonstrate a transparent and systematic approach to gathering this type of information. Formal methods of eliciting information from experts include Bayesian elicitation methods, qualitative operational research techniques, and consensus methods. Other forms of good practice include the use of a range of experts in order to capture variation in opinion and in clinical practice. Precautions should also be taken to ensure that the full range of clinical expertise relevant to the decision problem is represented.
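One simple way to retain variation between several experts, rather than averaging it away, is a linear opinion pool: a weighted mixture of each expert's elicited distribution. The sketch assumes that each expert's belief about a probability has already been elicited as a beta distribution; the three parameter pairs are hypothetical, and equal weighting is an assumption rather than a recommendation.

```python
from dataclasses import dataclass


@dataclass
class BetaBelief:
    """One expert's elicited Beta(a, b) distribution for a probability."""
    a: float
    b: float

    @property
    def mean(self) -> float:
        return self.a / (self.a + self.b)

    @property
    def var(self) -> float:
        n = self.a + self.b
        return self.a * self.b / (n ** 2 * (n + 1))


def linear_pool(beliefs, weights=None):
    """Mean and variance of a weighted mixture of expert distributions."""
    if weights is None:
        weights = [1.0 / len(beliefs)] * len(beliefs)  # equal weights (assumption)
    mean = sum(w * e.mean for w, e in zip(weights, beliefs))
    # Mixture variance = E[var_i + mean_i^2] - pooled_mean^2, so disagreement
    # between experts widens the pooled distribution.
    second_moment = sum(w * (e.var + e.mean ** 2) for w, e in zip(weights, beliefs))
    return mean, second_moment - mean ** 2


experts = [BetaBelief(2, 8), BetaBelief(3, 7), BetaBelief(1, 9)]  # hypothetical
pooled_mean, pooled_var = linear_pool(experts)
print(round(pooled_mean, 3), round(pooled_var, 4))  # 0.2 0.0206
```

The pooled variance is larger than the average of the individual variances because the experts disagree about the mean; that spread is the variation in opinion the text recommends capturing.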

How To Search Efficiently

Searches using a systematic review search approach typically aim to achieve a high level of sensitivity. That is, they are designed to maximize the retrieval of evidence that matches a prespecified and focussed topic. This often necessitates the retrieval of a large volume of irrelevant information to ensure that relevant evidence is not missed. Exhaustive searching aimed at high sensitivity is considered difficult in the context of modelling, and limited time and resources are frequently cited as constraints when undertaking searches for models. The process of model development generates multiple, interrelated information needs that, to some extent, have to be managed simultaneously and iteratively rather than sequentially. This is very different from the single, focussed question addressed by typical systematic review searches.

To progress model development, it is necessary to assimilate a broad range of information as efficiently as possible. Using techniques that focus on precision, rather than sensitivity, might provide a more efficient approach to searching. Precision is defined as the extent to which a search retrieves only relevant information and avoids the retrieval of irrelevant information. Search techniques that focus on precision can provide a means of maximizing the rate of return of relevant information. The objective of such techniques is to front-load the search process by capturing as much relevant information as early as possible, and to assess the diminishing returns of subsequent, broader search iterations. Such techniques do not preclude further iterations of extensive searching where a more in-depth approach is required. All the techniques described below aim to maximize precision, with the caveat that, when they are used on their own without subsequent broader iterative searching, there is an increased risk of missing potentially relevant information.
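Sensitivity, precision, and the diminishing returns of broader iterations can be made concrete with record counts. In the sketch below, sensitivity is measured against a hypothetical reference set of known relevant records (in practice the full set of relevant records is unknown, so sensitivity can only be estimated against such a set), and each iteration is scored by the number of new relevant records found per new record screened. The record identifiers and the three iterations are invented for illustration.

```python
# Hypothetical reference set of known relevant record IDs.
REFERENCE_SET = set(range(1, 11))

# Hypothetical results of three progressively broader search iterations.
iterations = [
    ("title only", set(range(1, 6)) | {101, 102}),
    ("title and abstract", set(range(1, 9)) | set(range(101, 121))),
    ("all fields", set(range(1, 10)) | set(range(101, 201))),
]


def sensitivity(retrieved: set, relevant: set) -> float:
    """Share of the relevant records that the search retrieved."""
    return len(retrieved & relevant) / len(relevant)


def precision(retrieved: set, relevant: set) -> float:
    """Share of the retrieved records that are relevant."""
    return len(retrieved & relevant) / len(retrieved) if retrieved else 0.0


def marginal_yields(iterations, relevant):
    """Per iteration: new records screened, new relevant found, yield, cumulative sensitivity."""
    seen, found, rows = set(), set(), []
    for name, retrieved in iterations:
        new_records = retrieved - seen  # only records not already screened
        new_relevant = new_records & relevant
        seen |= new_records
        found |= new_relevant
        rate = len(new_relevant) / len(new_records) if new_records else 0.0
        rows.append((name, len(new_records), len(new_relevant), rate,
                     sensitivity(found, relevant)))
    return rows


for name, screened, hits, rate, sens in marginal_yields(iterations, REFERENCE_SET):
    print(f"{name:20s} screened={screened:3d} new relevant={hits} "
          f"yield={rate:.3f} cumulative sensitivity={sens:.1f}")
```

Here the narrowest iteration finds half of the reference set from 7 records, while the broadest finds one further relevant record among 81 new ones; whether that return justifies the screening effort is the judgment the text describes.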

A search process aimed at maximizing the rate of return of relevant information and at minimizing the opportunity cost of managing irrelevant information can adopt widely used information retrieval techniques. Restricting searches to specific fields within bibliographic databases is a commonly recognized technique aimed at maximizing precision. For example, searching for relevant terms within the titles of journal articles should minimize the retrieval of irrelevant information. Depending on the nature and amount of information retrieved, a judgment can be made as to whether to extend the search to other fields, such as the abstract, with a view to increasing sensitivity. A similar technique can be adopted when using search filters to restrict search results to specific study designs. Search filters can be designed to maximize either the sensitivity or the precision of this restriction. High-precision filters, sometimes referred to as one-line filters, can be chosen to maximize relevance. The Hedges project at McMaster University in Canada has developed and tested a set of filters, including one-line filters.
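Field restriction, and the later widening of a search, can be scripted so that each iteration is recorded exactly. The sketch below builds query strings using PubMed's `[ti]` (title) and `[tiab]` (title/abstract) field tags; the topic terms are hypothetical, and the publication-type restriction `randomized controlled trial[pt]` is only a stand-in for a precision-oriented filter, not one of the Hedges filters.

```python
def build_query(terms, field="ti", study_filter=None):
    """Combine topic terms with OR inside one field, optionally ANDed with a filter."""
    block = " OR ".join(f'"{term}"[{field}]' for term in terms)
    query = f"({block})"
    if study_filter:
        query += f" AND ({study_filter})"
    return query


terms = ["quality of life", "health utility"]  # hypothetical topic terms

# First iteration: title only, for precision.
print(build_query(terms))
# Later iteration: widen to title/abstract if the first yield is too thin,
# while restricting by study design.
print(build_query(terms, field="tiab", study_filter="randomized controlled trial[pt]"))
```

Saving each generated string alongside the number of records it returned gives a simple record of how and why a search was widened.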

A highly pragmatic approach is to restrict the number of sources or databases searched. If a decision is made to extend the search, the results of the first iteration can be used to select and follow up highly relevant lines of investigation, for example, by carrying out focussed searches of newly identified keywords or by following up key authors.

Existing cost-effectiveness models in the same disease area may be important sources of information, and can be used to gain an understanding of the disease area, to identify possible modelling approaches, and to identify possible evidence sources with which to populate a model. This can be described as using a ‘rich patch’ or ‘high yield patch’ of information, whereby one source of information (i.e., the existing economic evaluation) is drawn on to satisfy multiple information needs. High yield patches can provide useful shortcuts and can help cover a lot of ground quickly when gaining an understanding of a decision problem. However, it is important to consider the limitations and reliability of a potentially rich source and to ensure that the weaknesses of existing economic analyses are not simply replicated.

It has already been stated that information needs do not arise sequentially but that multiple information needs might be identified at the outset of the modelling process or might arise simultaneously during the course of developing the model. A useful way of handling multiple information needs is to consider information retrieval as a process of information gathering alongside a more directed process of searching. The pursuit of one information need might retrieve information relevant to a second or third information need. The yield of this secondary or indirect retrieval can be saved and added to the yield of a later more directed retrieval process. For example, a search for costs might also retrieve relevant quality of life information. This would constitute secondary or indirect information retrieval. It could be retained and added to the yield or results of a later search focussing specifically on quality of life.
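Retaining secondary retrieval only requires that each record be tagged with every information need it serves, together with the search that first found it. The class below is a hypothetical sketch of such a log, not a feature of any particular reference-management tool; the record IDs and search names are invented.

```python
from collections import defaultdict


class EvidencePool:
    """Records retrieved during model development, indexed by information need."""

    def __init__(self):
        # need -> {record_id: name of the search that first retrieved it}
        self._by_need = defaultdict(dict)

    def add(self, record_id, needs, origin):
        for need in needs:
            # setdefault keeps the first origin, preserving the audit trail.
            self._by_need[need].setdefault(record_id, origin)

    def records_for(self, need):
        return dict(self._by_need.get(need, {}))


pool = EvidencePool()
# A search for costs also turns up a record reporting quality of life weights.
pool.add("r1", ["costs"], origin="search_costs")
pool.add("r2", ["costs", "quality_of_life"], origin="search_costs")
# A later, directed quality of life search re-finds r2 and adds r3.
pool.add("r2", ["quality_of_life"], origin="search_qol")
pool.add("r3", ["quality_of_life"], origin="search_qol")

print(pool.records_for("quality_of_life"))
```

Recording the origin of each record makes it possible to report which evidence came from indirect retrieval and which from the directed search.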

Sufficient Searching

There is some debate as to what constitutes sufficient searching in the context of decision-analytic modelling. In particular, the need to achieve a high level of sensitivity when undertaking searches is open to question on both practical and theoretical grounds. NICE has developed useful principles for the searching and reviewing of evidence for decision-analytic models. In terms of searching, this advice states that ‘‘the processes by which potentially relevant sources are identified should be systematic, transparent and justified such that it is clear that sources of evidence have not been identified serendipitously, opportunistically or preferentially.’’ To uphold this principle, it is important to consider the factors that might influence decisions to stop searching and that might inform judgments as to whether a sufficient search process has been undertaken.

This section summarizes some of the factors that could contribute to a definition of sufficient searching in the context of modelling and that might be used to support a judgment that sufficient searching has been undertaken.

In practical terms, it is often argued that there is not sufficient time or resource to undertake exhaustive, systematic review-type searching for every information need generated by the model. This supports the need for efficient methods such as those described in the previous section. It also requires pragmatic decisions on when to stop searching. Such decisions should be transparent in order that users of a model can judge the perceived acceptability or limitations of the scope of the searches undertaken.

Models are usually required to inform decision-making in the absence of ideal evidence. A decision to stop searching might be driven by an absence or lack of relevant evidence. If a relatively systematic search process exploring a number of different search options has retrieved no relevant evidence, it could be considered acceptable to assume that further extensive searching would not be of value.

One-way sensitivity analysis can be used to explore the implications, for the outputs of a model, of decisions to stop searching and of using alternative sources of evidence. This type of analysis is widely regarded as a useful means of supporting the judgments underpinning the identification and selection of evidence, and it is stated as a requirement in NICE's Guide to the Methods of Technology Appraisal.
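A one-way sensitivity analysis over alternative sources substitutes each candidate estimate for a single parameter while holding everything else fixed. In the sketch below the model is deliberately trivial (an incremental cost divided by a QALY gain), and the incremental cost, duration of benefit, utility gains from three unnamed sources, and threshold are all hypothetical.

```python
INCREMENTAL_COST = 5000.0  # hypothetical extra cost of the intervention
YEARS = 2.0                # hypothetical duration of the utility gain
THRESHOLD = 30000.0        # hypothetical cost-effectiveness threshold per QALY

# Hypothetical utility gains reported by three alternative sources.
utility_gain_by_source = {"source_a": 0.05, "source_b": 0.10, "source_c": 0.08}


def icer(utility_gain: float) -> float:
    """Incremental cost per QALY gained, with all other inputs held fixed."""
    return INCREMENTAL_COST / (utility_gain * YEARS)


results = {src: icer(gain) for src, gain in utility_gain_by_source.items()}
for src, value in results.items():
    verdict = "below" if value <= THRESHOLD else "above"
    print(f"{src}: ICER {value:,.0f} per QALY ({verdict} threshold)")
```

With these numbers the ICER ranges from 25,000 (source_b) to 50,000 (source_a), so the conclusion depends on which source is chosen; that is the signal that the identification and selection of evidence for this parameter deserves further attention.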

An extension of this idea would be to undertake some form of value of information analysis to understand the impact of uncertainty on the model and, ultimately, on the decision-making process. Bringing together multiple and diverse sources of evidence within one framework brings with it unavoidable uncertainty that cannot be fully understood or removed by comprehensive searching on every information need within the model. On theoretical grounds, therefore, it could be argued that exhaustive searching would not fully inform an understanding of uncertainty. Value of information analysis might be useful in assessing the value of further searching for evidence and in determining where search resources should be focussed. It could act as a device to prioritize areas for further rounds of searching during a modelling project, or to develop research recommendations for more in-depth searching or reviewing on specific topics to inform future models. It is important to note, however, that the usefulness of this approach depends on timing and on the extent to which the priorities for searching are revisited during the project. A parameter that appears unimportant during the early stages of model development may become more important as the model is refined.
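The expected value of perfect information (EVPI) is one common form of value of information analysis: the expected net benefit of choosing with perfect knowledge of the uncertain parameters, minus the expected net benefit of the best choice under current information. The Monte Carlo sketch below assumes a hypothetical two-option model in which net monetary benefit depends on one normally distributed QALY gain; all numbers are invented. Prioritizing searches for individual parameters would need a partial (per-parameter) EVPI, which this sketch does not compute.

```python
import random
import statistics


def evpi(net_benefit_draws):
    """EVPI from simulated net benefits: one tuple of option values per draw."""
    n_options = len(net_benefit_draws[0])
    # Best option under current information: highest mean net benefit.
    mean_by_option = [statistics.fmean(d[j] for d in net_benefit_draws)
                      for j in range(n_options)]
    # With perfect information, the best option is chosen in every draw.
    expected_max = statistics.fmean(max(d) for d in net_benefit_draws)
    return expected_max - max(mean_by_option)


# Hypothetical model: option 0 = current care (net benefit 0),
# option 1 = new intervention with an uncertain QALY gain.
random.seed(1)
WTP, COST = 20000.0, 3000.0  # hypothetical willingness to pay and extra cost
draws = []
for _ in range(10000):
    qaly_gain = random.gauss(0.2, 0.1)           # uncertain parameter
    draws.append((0.0, WTP * qaly_gain - COST))  # net monetary benefit per option

print(f"EVPI per patient: {evpi(draws):.0f}")
```

A low EVPI relative to the cost of further searching or reviewing suggests that additional effort on this part of the model is unlikely to change the decision.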

Reviewing And Selecting Evidence

Reviewing techniques used in systematic reviews are, in principle, applicable to reviewing and selecting evidence for decision-analytic models. However, because of certain factors, gold standard systematic review methods, such as those of the Cochrane Collaboration, are not directly transferable, and consideration has to be given to adapting these useful and well established methods for modelling. These factors include time and resource constraints, the need to balance quality of evidence with relevance to the context of the decision, and the frequent need to accommodate a lack of available, relevant evidence.

Time And Resource Implications

As in the case of searching for information, reviewing the breadth and diversity of evidence used in models is typically constrained by limited time and resources. The value of in-depth reviewing for every information need in the model, such as the use of strict inclusion criteria, extensive quality assessment procedures, and independent reviewing by two reviewers, is also open to question. Various pragmatic rapid review methods can be applied, including reduced levels of data extraction, quality assessment, and reporting. In addition, important aspects of the model can be identified and prioritized as requiring a greater proportion of the available reviewing resource.

Assessing The Quality Of Evidence

The systematic assessment of the quality of studies considered for inclusion in a systematic review is one of the many mechanisms aimed at checking for and minimizing the risk of bias. Quality assessment tools, sometimes in the form of checklists, exist for many different types of study design including RCTs, observational studies, and economic evaluations. The tools provide a series of questions on the conduct and design of individual studies allowing the systematic consideration of the strengths and weaknesses in terms of reliability, validity, and relevance. Many different checklists exist. Organizations such as the Centre for Reviews and Dissemination at the University of York provide access to a range of checklists. The Cochrane Collaboration, however, recommends against the use of checklists arguing that this leads to an oversimplification of the quality assessment process. The Collaboration has developed the Cochrane Risk of Bias tool, which can be applied to both RCTs and nonrandomized studies.

Given the diversity of information used to inform models, it is highly likely that quality assessment tools do not exist for every type of evidence used. In such circumstances, it might be possible to generate a number of questions, possibly based on existing tools, to guide the assessment. Members of the Cochrane and Campbell Economic Methods Working Group suggest a number of issues for consideration when assessing evidence on quality of life weights. The absence of standards for quality assessment is a particular problem for routine data sources and reference sources. Commonly used sources, such as national statistical collections, classifications of disease, and drug formularies, might be regarded as sufficiently authoritative to be accepted without in-depth quality assessment. The International Society for Pharmacoeconomics and Outcomes Research (ISPOR) has created a Task Force on Real World Data to consider issues, including quality, relating to the use in models of routinely collected data and other data not collected in conventional RCTs.

Two tools have been developed to support quality assessment specifically in the context of decision-analytic models for cost-effectiveness. The first, devised by members of the Cochrane and Campbell Economic Methods Working Group (C-CEMG), is a series of hierarchies of evidence for five important model data inputs: clinical effect sizes, baseline clinical data, resource use, unit costs, and quality of life weights. Although the hierarchies do not allow the quality of each individual study to be assessed, they are useful for comparing studies with one another. The second, the Grading of Recommendations Assessment, Development, and Evaluation (GRADE) system, provides an ‘economic evidence profile’: a framework and criteria for rating the quality of evidence, from both research-based and nonresearch-based sources, for all components that may be used to populate model parameters.
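The comparison between studies that such hierarchies support can be sketched in Python. This is a minimal illustration under stated assumptions and does not reproduce the C-CEMG tool itself. The `CandidateSource` class, the `rank_between_sources` function, the source names, and the numeric levels (1 = most preferred) are all hypothetical. Assigning a level to a source remains a reviewer judgment made against the published hierarchy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateSource:
    """Hypothetical record for one candidate source of a model input."""
    name: str            # illustrative label for a study or data source
    parameter: str       # model input the source could inform
    hierarchy_rank: int  # assumed scale: level in a hierarchy, 1 = most preferred
    relevance_note: str  # reviewer's note on fit with the decision context


def rank_between_sources(candidates, parameter):
    """Order the candidates for one model input by hierarchy level.

    The ordering compares sources with one another. It does not assess the
    quality of any single study and is not used as an inclusion rule.
    """
    pool = [c for c in candidates if c.parameter == parameter]
    return sorted(pool, key=lambda c: c.hierarchy_rank)


# Invented candidates for illustration only.
candidates = [
    CandidateSource("Source A", "quality of life weights", 3, "different country"),
    CandidateSource("Source B", "quality of life weights", 1, "older population"),
    CandidateSource("Source C", "unit costs", 1, "national tariff"),
    CandidateSource("Source D", "quality of life weights", 2, "matches target population"),
]

for c in rank_between_sources(candidates, "quality of life weights"):
    print(c.hierarchy_rank, c.name, "-", c.relevance_note)
```

The relevance note is kept next to the rank for a reason. A hierarchy orders sources relative to one another, but the ordering does not by itself decide which source enters the model.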

Assessing The Relevance Of Evidence

The relevance of evidence to the context of the decision is a crucial consideration in assessing evidence to inform decision-analytic models. It marks an important distinction between assessing evidence for models and assessing it for systematic reviews. Standard quality assessment tools used in systematic reviews often include questions relating to relevance, but in practice their focus is on scientific rigor and internal validity. In considering the role of routine data sources, the ISPOR Real World Data Task Force emphasizes that ‘context matters greatly’ in determining the value of available sources. The C-CEMG hierarchies and the GRADE system both place factors of relevance directly alongside the assessment of scientific quality. In assessing the value or usefulness of nonclinical sources of evidence, the trade-off between contextual relevance and internal validity is often a particularly important consideration.

The tension between relevance and scientific quality is highlighted by the role of expert judgment as a source of evidence. As a basis for parameter estimates, expert judgment is placed at the bottom of the evidence hierarchy, as it is in systematic reviews. However, expert judgment is recognized as important in interpreting the available evidence and in validating the model, by assessing its face validity, or credibility, as an acceptable representation of the decision problem.

Selecting Evidence For Incorporation In The Model

The assessment of quality and relevance plays an important part in selecting evidence for use in a model. Some initial eligibility criteria might be used to select references from a list of search results, but these do not take the form of strict, predefined inclusion and exclusion criteria, for a number of reasons. First, incorporating nonclinical evidence in a model tends not to involve synthesizing data from several studies into a single pooled estimate. Rather, a range of estimates may be derived or selected from a number of possible options, all of which might be relevant for different reasons. Second, ‘ideal’ evidence is often absent. A clinical effectiveness review can remain inconclusive when no evidence is available. This is not an option for decision-analytic models, which have to support a decision-making process regardless of the available evidence base. In doing so, the purpose of a decision model is to identify what might be, on average, the best option and to quantify the uncertainty surrounding the decision. In the absence of ideal evidence, more flexible selection criteria allow the identification of the best available evidence, from which the best or closest match can be judged and selected.

Evidence is therefore not classified as eligible or ineligible. Rather, the characteristics of each source can be seen as attributes by which its eligibility might be judged. These attributes may differ from those of other sources; that is, different sources will be judged useful for different reasons. The quality-relevance trade-off is an example of this.

More flexible selection criteria allow the identification of a relatively varied set of potentially relevant, or candidate, sources of evidence from which final selections can be made. Evidence is selected for incorporation in a model by weighing the attributes of each source against those of the others. The assessment of quality and relevance informs this process, sometimes supported by a degree of data extraction to aid comparisons between studies. This differs from standard systematic reviews, in which quality assessment and data extraction take place after evidence has been selected for inclusion. Moreover, selecting one source of evidence over another does not necessarily mean excluding the other, because the implications of selecting alternative sources can be explored through sensitivity analysis.

In standard systematic reviews, the use of predetermined, strict selection criteria is another mechanism aimed at minimizing the risk of bias. The more flexible approach used in modelling involves choices based on weighing up and trading off the relative merits of alternative sources of evidence. This avoids the need to label evidence as relevant or not relevant. It also allows a range of sources of evidence to be included and explored and, in the absence of ideal evidence, permits the incorporation of the best available evidence. In adopting this approach, it is important that the process of selecting evidence is transparent and that the choices made are justified. The extent and impact of uncertainty arising from possible bias in the selection process must also be accounted for through sensitivity analysis.

A number of procedures can be used to systematize the process of selection. Quality assessment and data extraction can help structure the choices being made. Reporting the process can improve transparency, including tabulating the key characteristics of the candidate sources of evidence. Selection can be carried out through systematic discussion within the modelling project team, so that decisions are made jointly. Finally, sensitivity analysis can assess the impact of uncertainty generated by the selection process, including exploration of alternative sources not used in the base case analysis.
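The tabulation and sensitivity analysis steps can be illustrated with a deliberately simple Python sketch in which everything is hypothetical:

- A toy model in which a new treatment adds `EXTRA_YEARS` of life in a single health state at an incremental cost `DELTA_COST`.
- Three invented candidate sources for the utility of that state.
- Made-up quality and relevance ratings for each source.

The base case uses the source judged the closest match. The other candidates are retained and run as scenarios rather than discarded.

```python
# Hypothetical inputs for illustration only; not taken from any real model.
DELTA_COST = 12_000.0   # incremental cost of the new treatment
EXTRA_YEARS = 0.5       # additional years spent in the health state

# Tabulated candidate sources for one parameter (utility of the health state),
# with the attributes weighed when choosing between them.
candidate_utilities = {
    "Source A": {"utility": 0.80, "quality": "moderate", "relevance": "different country"},
    "Source B": {"utility": 0.60, "quality": "high", "relevance": "older population"},
    "Source D": {"utility": 0.75, "quality": "moderate", "relevance": "matches target population"},
}
BASE_CASE = "Source D"  # assumed judgment: the best available match


def icer(delta_cost, delta_qaly):
    """Incremental cost-effectiveness ratio: extra cost per extra QALY."""
    return delta_cost / delta_qaly


def scenario_analysis(sources, base_case):
    """Re-run the toy model once per candidate source, base case first."""
    order = [base_case] + [name for name in sources if name != base_case]
    results = []
    for name in order:
        # Toy assumption: the only QALY difference is the extra time in this state.
        delta_qaly = EXTRA_YEARS * sources[name]["utility"]
        results.append((name, icer(DELTA_COST, delta_qaly)))
    return results


for name, value in scenario_analysis(candidate_utilities, BASE_CASE):
    label = "base case" if name == BASE_CASE else "scenario"
    relevance = candidate_utilities[name]["relevance"]
    print(f"{name} ({label}; {relevance}): ICER = {value:,.0f} per QALY")
```

With these invented inputs, the ICER is 32,000 per QALY in the base case and ranges from 30,000 to 40,000 per QALY across the alternative sources. The spread, rather than any single figure, is what a selection-related sensitivity analysis is meant to expose.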

Conclusion

Decision-analytic models assess cost-effectiveness within a complex analytic framework. This approach requires a broad range of information in addition to evidence on costs and effects, including both research-based and nonresearch-based information.

Information retrieval and reviewing methods developed for systematic reviews of clinical effectiveness can be used to identify and assess evidence used to inform decision-analytic models. To make the best use of these methods, it is necessary to adapt them to the specific requirements of developing decision-analytic models. This includes employing a range of information retrieval techniques to access and exploit diverse formats of evidence. It also includes adapting quality assessment and data extraction procedures to support systematic and transparent judgments in selecting the best and most relevant available evidence.

Decision-analytic models permit an evidence-based approach to decision-making that takes account of the complexities and factors specific to the context of the decision problem. Combining diverse sources of evidence inevitably introduces uncertainty. This uncertainty cannot be wholly addressed by minimizing the risk of bias associated with individual sources. Searching and reviewing methods used in developing a model can provide an efficient means of capturing and understanding a broad range of information. They can also be used to address the limitations of the best available relevant evidence systematically and transparently, so that the uncertainty associated with that evidence base can be fully accounted for.

Bibliography

- Centre for Reviews and Dissemination (2008). Systematic reviews: CRD’s guidance for undertaking reviews in health care, 3rd ed. York: CRD, University of York.

- Cooper, N., Coyle, D., Abrams, K., Mugford, M. and Sutton, A. (2005). Use of evidence in decision models: an appraisal of health technology assessments in the UK since 1997. Journal of Health Services Research & Policy 10, 245–250.

- Cooper, N. J., Sutton, A. J., Ades, A. E., Paisley, S. and Jones, D. R. (2007). Use of evidence in economic decision models: Practical issues and methodological challenges. Health Economics 16, 1277–1286.

- Egger, M., Davey Smith, G. and Altman, D. G. (eds.) (2001). Systematic reviews in health care: Meta-analysis in context, 2nd ed. London: BMJ.

- Garrison, L. P., Neumann, P. J., Erickson, P., Marshall, D. and Mullins, D. (2007). Using real-world data for coverage and payment decisions: The ISPOR real-world data task force report. Value in Health 10, 326–335.

- Glanville, J. and Paisley, S. (2010). Identifying economic evaluations for health technology assessment. International Journal of Technology Assessment in Health Care 26, 436–440.

- Golder, S. and Loke, Y. (2010). Sources of information on adverse effects: A systematic review. Health Information and Libraries Journal 27, 176–190.

- Golder, S., Glanville, J. and Ginnelly, L. (2005). Populating decision-analytic models: The feasibility and efficiency of database searching for individual parameters. International Journal of Technology Assessment in Health Care 21, 305–311.

- Higgins, J. P. T. and Green, S. (eds.) (2011). Cochrane handbook for systematic reviews of interventions: Version 5.1.0 (updated March 2011). Available at: https://training.cochrane.org/handbook.

- Kaltenthaler, E., Tappenden, P., Paisley, S. and Squires, H. (2011). Identifying and reviewing evidence to inform the conceptualisation and population of cost-effectiveness models. NICE DSU Technical Support Document 13. Sheffield: NICE DSU.

- Paisley, S. (2010). Classification of evidence in decision-analytic models of cost-effectiveness: A content analysis of published reports. International Journal of Technology Assessment in Health Care 26, 458–462.

- Shemilt, I., Mugford, M., Vale, L., Marsh, K. and Donaldson, C. (eds.) (2010). Evidence-based decisions and economics: Health care, social welfare, education and criminal justice, 2nd ed. London: Wiley-Blackwell.

- Weinstein, M. C., O’Brien, B., Hornberger, J., et al. (2003). Principles of good practice for decision analytic modeling in health-care evaluation: Report of the ISPOR task force on good research practices-modeling studies. Value in Health 6, 9–17.